AI Agent Tools: Tutorial & Examples

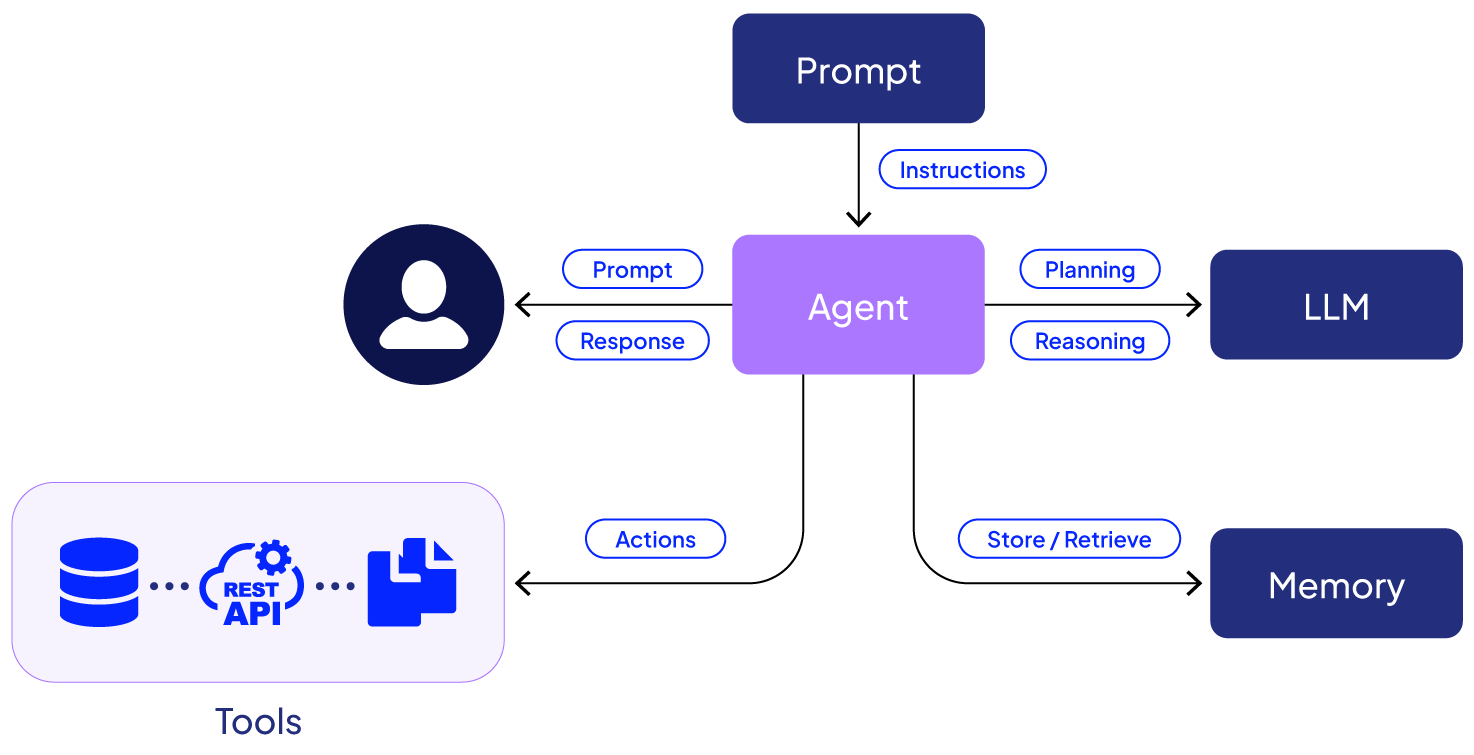

AI agents can make decisions and operate autonomously. They can reason, plan, and generate ideas. However, they require tools to interact with other real-world systems.

AI agent tools are functions that an agent calls to engage with its environment. These tools can take many forms, such as an API call, a database query, a web scraper, a process trigger, or even another AI agent. The definition is broad and covers any capability the agent can leverage to gather information, execute actions, or influence the system in which it operates.

AI agent tools serve as interfaces, enabling agents to interact with external systems. These may include databases, APIs, local file systems, or custom services. Traditional AI systems rely solely on pre-trained knowledge, whereas agents equipped with tools can access information in real time, execute actions, and adapt to changing environments. This turns the agent from a passive information source into an active entity that can solve a broader range of problems.

This article explores the concepts, best practices, and implementation strategies for building robust tool-enabled agent systems.

Summary of best practices for using AI agent tools

Agents and tools

The difference between an agent workflow with and without tool access becomes apparent when comparing their capabilities in real-world scenarios.

Consider an agent answering questions about the current weather:

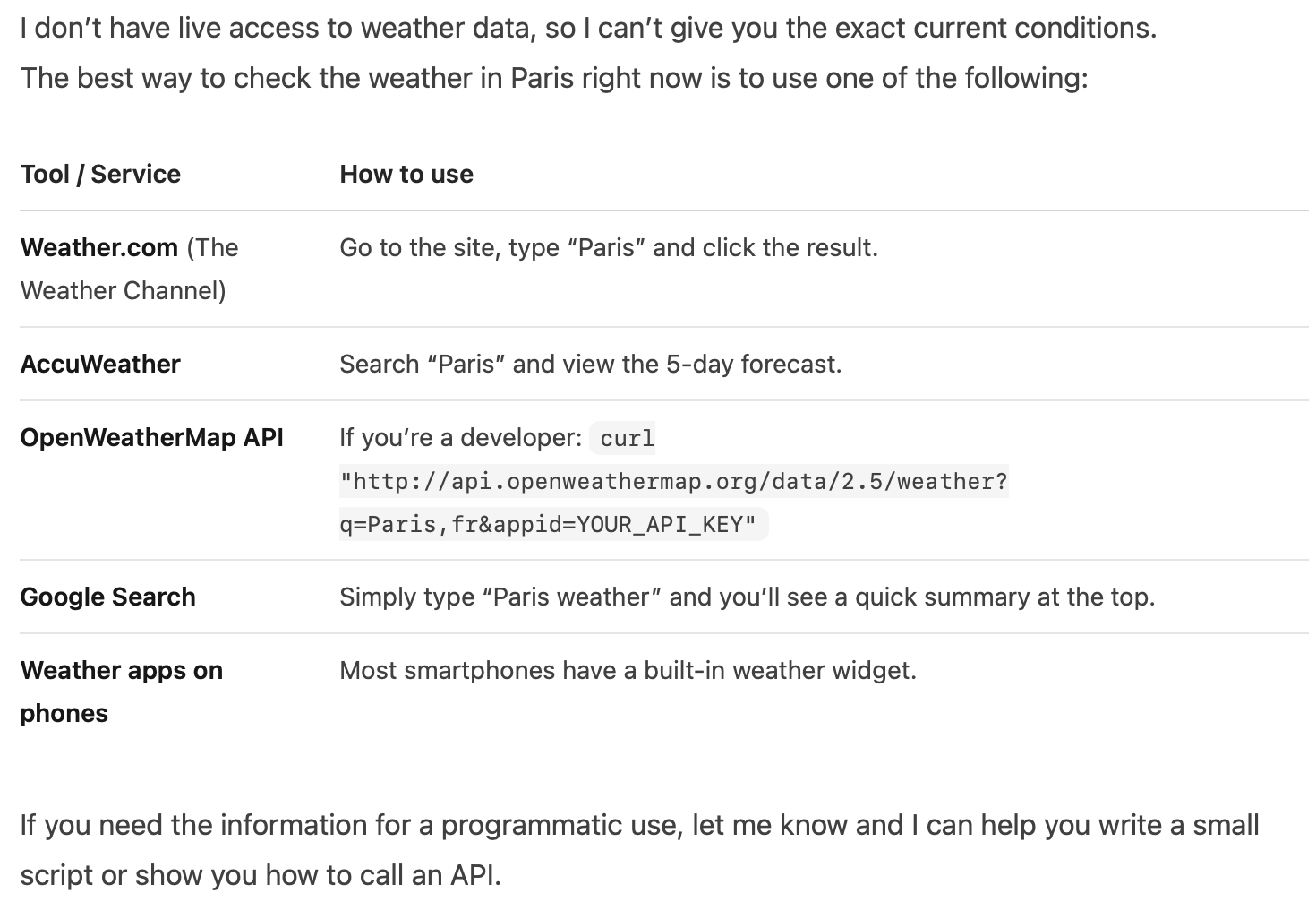

An AI agent without tools

User: "What’s the current weather in Paris?"

The agent responds that it does not have access to current data and instead lists tools and services that users can consult themselves.

An AI agent with tools

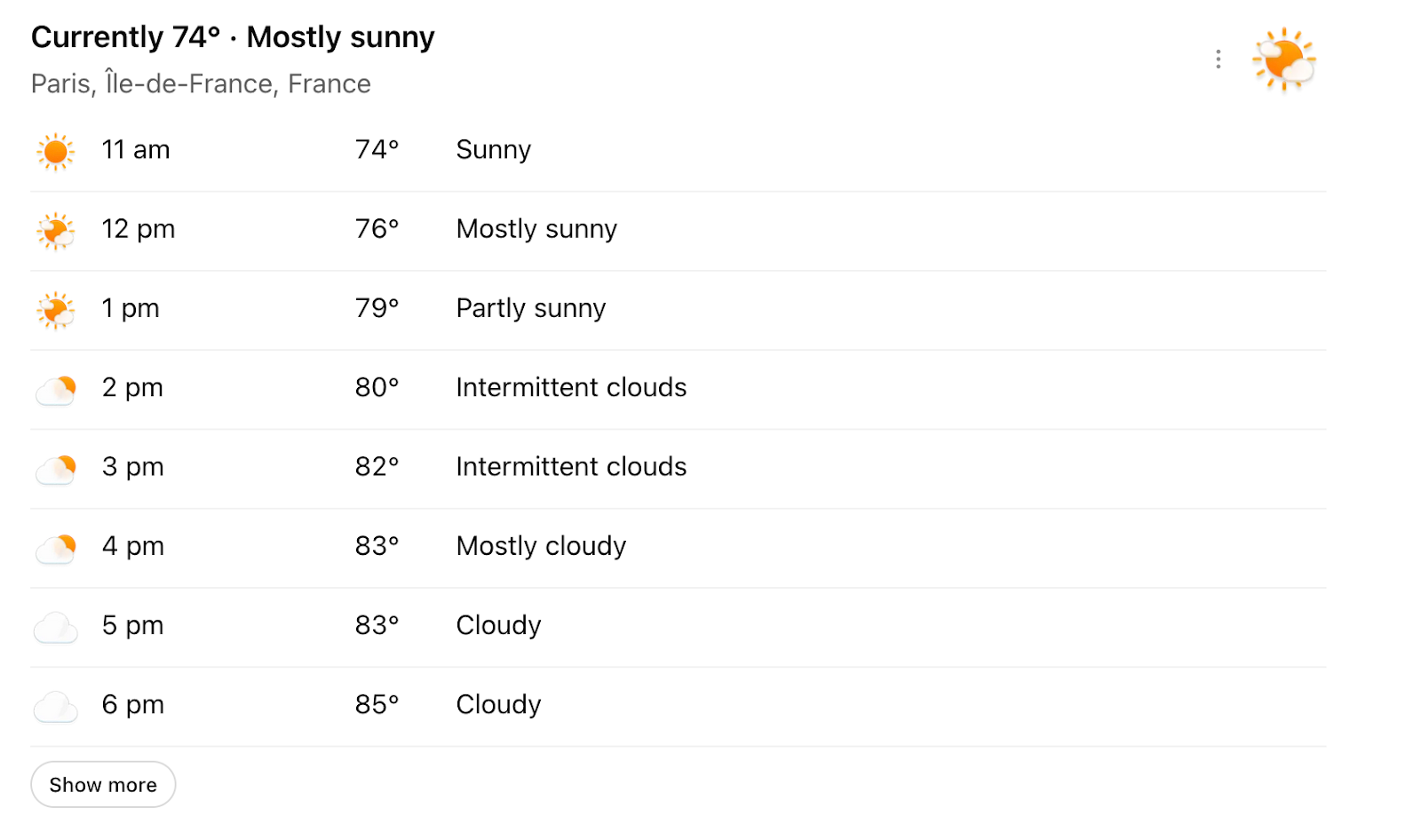

User: "What’s the current weather in Paris?"

The screenshot shows the agent’s response to the same question when it has access to tools.

This comparison illustrates how tools keep the agent up to date, in this case by calling a weather API. The agent decides whether to invoke the weather tool based on the user’s request.

Tools enhance the agents’ abilities by enabling real-time data access, automated action execution, and integration with existing business systems. They allow agents to interface with databases, make API calls to external services, send notifications, update records, and trigger complex business processes automatically. This capability enables agents to handle complete workflows rather than individual tasks, significantly increasing their practical value.

Defining a tool

Tool definitions should follow declarative principles: they should describe what a tool accomplishes rather than how it operates. This abstraction lets agents understand tool capabilities without knowing the implementation details, leading to a more flexible and maintainable system.

Modern libraries such as smolagents provide frameworks for standardizing tool definitions and handling common patterns. These tools can perform diverse functions beyond information retrieval, including executing SQL DML queries to update databases, sending automated notifications, triggering business workflows, or integrating with third-party services.

Below is an example of how smolagents can be used to define tools using the decorator pattern:

from typing import Any, Dict

from smolagents import tool


@tool
def get_weather(location: str) -> Dict[str, Any]:
    """
    Get current weather for a location.

    Args:
        location: Name of the city or region to look up.
    """
    # Mock weather data; a real tool would call a weather API here.
    return {
        "location": location,
        "temperature": "72°F",
        "condition": "Sunny",
        "humidity": "45%",
    }


@tool
def calculate(expression: str) -> Dict[str, Any]:
    """
    Evaluate a mathematical expression.

    Args:
        expression: Arithmetic expression to evaluate, such as "2 + 3 * 4".
    """
    try:
        # Builtins are disabled to limit what eval() can reach. This is not a
        # full sandbox, so do not pass untrusted input to it in production.
        result = eval(expression, {"__builtins__": {}}, {})
        return {"result": result, "status": "success"}
    except Exception as e:
        return {"error": str(e), "status": "error"}


# Pass the decorated functions to an agent through its tools argument, e.g.
# ToolCallingAgent(tools=[get_weather, calculate], model=model). The agent
# reads each tool's name, type hints, and docstring to decide when to call it.

Role-aware tools

Access to tools should be based on agent roles, so that each agent can call only the tools its permissions allow. This prevents unauthorized actions and improves both security and efficiency.

For example:

- A retriever agent queries the CRM database for customer details, but cannot modify records.

- An executor agent updates records and triggers emails, but cannot access sensitive analytics APIs.

The best practice is to start with minimal permissions. If the agent determines that it requires a restricted tool, it must request access and possibly seek approval from a human. The benefits of doing so include:

- Prevention of accidental misuse.

- Reduction of system load from unnecessary calls.

- Clearer mental models for large workflows.
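One way to enforce minimal permissions is a small registry that maps each role to the tools it may call. The sketch below is only an illustration and not a feature of any particular framework; `RoleAwareToolbox`, `ToolAccessError`, and the role and tool names are all hypothetical.

```python
from typing import Callable, Dict, Set


class ToolAccessError(PermissionError):
    """Raised when a role tries to call a tool it has not been granted."""


class RoleAwareToolbox:
    """Registry that checks an agent's role before running a tool."""

    def __init__(self) -> None:
        self._tools: Dict[str, Callable[..., object]] = {}
        self._grants: Dict[str, Set[str]] = {}

    def register(self, name: str, func: Callable[..., object]) -> None:
        self._tools[name] = func

    def grant(self, role: str, tool_name: str) -> None:
        # Roles start with no permissions; each tool is granted explicitly.
        self._grants.setdefault(role, set()).add(tool_name)

    def call(self, role: str, tool_name: str, **kwargs: object) -> object:
        if tool_name not in self._grants.get(role, set()):
            raise ToolAccessError(f"Role '{role}' may not call '{tool_name}'")
        return self._tools[tool_name](**kwargs)


# Hypothetical tools standing in for real CRM operations.
def read_customer(customer_id: str) -> dict:
    return {"id": customer_id, "status": "active"}


def update_customer(customer_id: str, status: str) -> str:
    return f"{customer_id} set to {status}"


toolbox = RoleAwareToolbox()
toolbox.register("read_customer", read_customer)
toolbox.register("update_customer", update_customer)
toolbox.grant("retriever", "read_customer")
toolbox.grant("executor", "update_customer")

print(toolbox.call("retriever", "read_customer", customer_id="CUST001"))
try:
    toolbox.call("retriever", "update_customer", customer_id="CUST001", status="inactive")
except ToolAccessError as exc:
    print(f"Denied: {exc}")
```

A denied call is also a natural place to trigger an access request or a human approval step instead of simply failing.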

Tool selection and invocation

Effective tool selection uses both rule-based and prompt-based approaches, striking a balance between automation and flexibility. The choice of which one to employ depends on the task, its complexity, and the requirement for deterministic selection.

Rule-based selection

This approach uses predetermined logic to choose tools based on specific conditions or patterns. It works well for predictable workflows where the tool choice is clear from input patterns or system state. For example, a rule might specify that any query containing a tracking number should trigger the shipping lookup tool.

class Tool:
    """Placeholder base class; replace these stubs with real tools."""


class ShippingLookupTool(Tool): ...
class WeatherTool(Tool): ...
class CalculatorTool(Tool): ...
class DefaultTool(Tool): ...


class RuleBasedToolSelector:
    def __init__(self):
        self.tools = {
            'shipping_lookup': ShippingLookupTool(),
            'weather_check': WeatherTool(),
            'calculator': CalculatorTool(),
            'default': DefaultTool()
        }

    def select_tool(self, user_input: str) -> Tool:
        """Select tool based on predefined rules and patterns"""
        text = user_input.lower()

        # Rule 1: Shipping tracking queries
        if any(pattern in text for pattern in ['track', 'shipping', 'delivery', 'package']):
            return self.tools['shipping_lookup']

        # Rule 2: Weather queries
        if any(pattern in text for pattern in ['weather', 'temperature', 'forecast', 'rain']):
            return self.tools['weather_check']

        # Rule 3: Mathematical calculations. Operator characters also appear in
        # ordinary text (for example, hyphens), so this rule is checked last.
        if any(char in user_input for char in ['+', '-', '*', '/', '=']) or \
                any(word in text for word in ['calculate', 'compute', 'math']):
            return self.tools['calculator']

        # Default fallback
        return self.tools['default']


# Usage example
selector = RuleBasedToolSelector()
user_query = "Track my package with tracking number 12345"
selected_tool = selector.select_tool(user_query)
# Returns: shipping_lookup tool

Prompt-based selection

This approach enables agents to reason about tool choice based on context, goals, and available information, and then select the most suitable tool. It handles complex scenarios where multiple tools might be applicable, and the optimal tool choice depends on the context. The agent evaluates the situation and selects the most appropriate tool based on understanding the problem and the desired outcome.

from typing import Optional


class PromptBasedToolSelector:
    def __init__(self, llm_client):
        self.llm_client = llm_client
        # Tool names mapped to the descriptions shown to the model
        self.available_tools = {
            'shipping_lookup': 'Look up shipping status and delivery information',
            'weather_check': 'Get current weather and forecast data',
            'calculator': 'Perform mathematical calculations',
            'database_query': 'Query customer database for information',
            'email_sender': 'Send automated emails'
        }

    def select_tool(self, user_input: str, context: Optional[dict] = None) -> str:
        """Use reasoning to select the most appropriate tool"""
        prompt = f"""
Given the user's request and available tools, select the most appropriate tool.

User Request: "{user_input}"
Context: {context or 'No additional context'}

Available Tools:
{self._format_tools()}

Instructions:
1. Analyze the user's request carefully
2. Consider the context and user's intent
3. Select the single most appropriate tool
4. Provide a brief reasoning for your choice

Response format:
Tool: [tool_name]
Reasoning: [brief explanation]
"""
        response = self.llm_client.generate(prompt)
        return self._extract_tool_name(response)

    def _format_tools(self) -> str:
        return '\n'.join(
            f"- {name}: {description}"
            for name, description in self.available_tools.items()
        )

    def _extract_tool_name(self, response: str) -> str:
        # Read the "Tool: <name>" line and accept only known tool names
        for line in response.split('\n'):
            line = line.strip()
            if line.startswith('Tool:'):
                tool_name = line.split(':', 1)[1].strip()
                if tool_name in self.available_tools:
                    return tool_name
        return 'default'


# Usage example
# LLMClient is a placeholder for your AI model client; it must expose
# a generate(prompt) method that returns the model's text response.
llm_client = LLMClient()
selector = PromptBasedToolSelector(llm_client)

user_query = "I need to check if my customer John Smith has any outstanding invoices"
context = {"user_role": "customer_service", "customer_id": "CS001"}

selected_tool = selector.select_tool(user_query, context)
# AI reasoning should select the 'database_query' tool

An agentic workflow typically includes a combination of rule-based and prompt-based tool selection.

Fallbacks

A robust system needs comprehensive fallback logic for failed tool invocations. When a primary tool fails, the system should attempt an alternative approach, return a meaningful error message, or escalate to a human operator. This keeps the workflow running even if a single tool call fails.
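A fallback chain can be sketched as an ordered list of tools that are tried in turn, with an optional escalation hook at the end. This is a minimal illustration under stated assumptions; `call_with_fallbacks` and the weather and escalation functions are hypothetical names.

```python
from typing import Callable, List, Optional


def call_with_fallbacks(
    tools: List[Callable[[str], str]],
    query: str,
    escalate: Optional[Callable[[str, List[str]], str]] = None,
) -> str:
    """Try each tool in order; escalate if all of them fail."""
    errors: List[str] = []
    for tool_fn in tools:
        try:
            return tool_fn(query)
        except Exception as exc:  # record the failure and try the next tool
            errors.append(f"{tool_fn.__name__}: {exc}")
    if escalate is not None:
        return escalate(query, errors)
    raise RuntimeError("All tools failed: " + "; ".join(errors))


# Hypothetical tools: the primary API is down, the cache still answers.
def primary_weather_api(city: str) -> str:
    raise TimeoutError("primary API timed out")


def cached_weather(city: str) -> str:
    return f"Cached weather for {city}: 18°C, cloudy"


def hand_off_to_human(query: str, errors: List[str]) -> str:
    return f"Escalated '{query}' to a human operator after {len(errors)} failures"


print(call_with_fallbacks([primary_weather_api, cached_weather], "Paris"))
print(call_with_fallbacks([primary_weather_api], "Paris", escalate=hand_off_to_human))
```

Collecting the error messages along the way gives the human operator, or the agent itself, enough context to understand why the primary path failed.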

Tool execution patterns

The following are the common tool execution patterns:

Sequential execution

In sequential execution, an agent calls the tools one after another. The agent decides the order of the calls and may review each result before calling the next tool. In a sequence such as tool A → agent (optional) → tool B, tool A must finish executing before its results are passed on to tool B. This pattern enables dynamic workflow construction, where the agent determines the optimal sequence of operations based on intermediate results and changing requirements.
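The sketch below illustrates the tool A → agent → tool B sequence with plain functions. The tools and the `choose_next_tool` function, which stands in for the agent's reasoning between calls, are hypothetical.

```python
from typing import Callable, List


# Hypothetical tools for an order-support workflow.
def check_order_status(order_id: str) -> str:
    return "delayed" if order_id.endswith("7") else "delivered"


def open_support_ticket(order_id: str) -> str:
    return f"Ticket opened for order {order_id}"


def request_feedback(order_id: str) -> str:
    return f"Feedback survey sent for order {order_id}"


def choose_next_tool(status: str) -> Callable[[str], str]:
    """Stand-in for the agent's reasoning step between tool calls."""
    return open_support_ticket if status == "delayed" else request_feedback


def handle_order(order_id: str) -> List[str]:
    status = check_order_status(order_id)   # tool A runs to completion
    next_tool = choose_next_tool(status)    # the agent reviews the result
    return [status, next_tool(order_id)]    # tool B runs afterwards


print(handle_order("1007"))
print(handle_order("1001"))
```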

Parallel execution

Multiple tools can execute simultaneously when tasks are independent of each other. This approach significantly reduces response times and works well for workflows involving multiple independent data sources or actions.

Agents must carefully identify which operations can safely run in parallel and handle potential conflicts or dependencies. When tools are known to be independent of each other, the developer can define rules in advance that allow them to run concurrently.

For example, when an agent reviews code, a tool that examines security vulnerabilities and a tool that identifies performance bottlenecks can run in parallel if the rules allow it.
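The code review example could be sketched with Python's standard `concurrent.futures` module. The two scanner functions are hypothetical stand-ins, and the short sleeps simulate slow external calls.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict


# Hypothetical review tools; the sleeps stand in for slow external calls.
def scan_security(code: str) -> str:
    time.sleep(0.1)
    return "eval() call found" if "eval(" in code else "no issues"


def scan_performance(code: str) -> str:
    time.sleep(0.1)
    return "nested loop found" if code.count("for ") > 1 else "no issues"


def run_in_parallel(tools: Dict[str, Callable[[str], str]], code: str) -> Dict[str, str]:
    """Run independent tools concurrently and collect results by tool name."""
    with ThreadPoolExecutor(max_workers=len(tools)) as pool:
        futures = {name: pool.submit(fn, code) for name, fn in tools.items()}
        return {name: future.result() for name, future in futures.items()}


snippet = "for a in xs:\n    for b in ys:\n        eval(a + b)"
report = run_in_parallel(
    {"security": scan_security, "performance": scan_performance}, snippet
)
print(report)
```

Because neither scanner reads the other's output, the total wait is roughly that of the slowest tool rather than the sum of both.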

Tool chaining

Tool chaining focuses on the connection and flow between multiple tools to complete a larger task. In this pattern, agents pass intermediate results through multiple tools to facilitate sophisticated, multi-step problem-solving. This pattern works well for workflows where careful ordering is essential.

For example, the tool for getting customer information must be executed before sending an email. In tool chaining, the output of one tool is consumed by another, so the structure of the output and input of the two tools must be compatible.
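A minimal sketch of this chain is shown below. The shared `CustomerRecord` type makes the compatibility requirement explicit: it is both the output of the lookup tool and the input of the email tool. All names and data here are hypothetical.

```python
from typing import Dict, TypedDict


class CustomerRecord(TypedDict):
    """Shared shape: output of the lookup tool, input of the email tool."""
    name: str
    email: str


# Hypothetical data and tools for illustration only.
_CUSTOMERS: Dict[str, CustomerRecord] = {
    "CUST001": {"name": "John Smith", "email": "john.smith@email.com"},
}


def get_customer(customer_id: str) -> CustomerRecord:
    return _CUSTOMERS[customer_id]


def draft_email(customer: CustomerRecord, subject: str) -> str:
    first_name = customer["name"].split()[0]
    return f"To: {customer['email']}\nSubject: {subject}\n\nHi {first_name},"


def notify_customer(customer_id: str, subject: str) -> str:
    """Chain: the lookup must finish before the email can be drafted."""
    customer = get_customer(customer_id)
    return draft_email(customer, subject)


print(notify_customer("CUST001", "Your invoice is ready"))
```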

Observability and logging

Observability means tracing every tool call: who made it, when, why, and what the result was. It turns a black-box system into a transparent process that can be continuously optimized. Effective logging captures tool invocations with sufficient detail to support debugging, optimization, and compliance requirements.

Essential logging elements may include the following:

- Which agent invoked each tool

- Timing information or execution duration

- The reasoning or context that led to the particular tool selection

- Input parameters and output results

- Performance metrics

- Any errors or exceptions encountered during execution
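The elements above can be captured with a small decorator built on the standard library. This is a generic sketch, not the Patronus tracing API; `logged_tool`, `TOOL_CALL_LOG`, and the `agent` and `reason` keyword arguments are assumptions made for illustration.

```python
import functools
import json
import logging
import time
from typing import Any, Callable, Dict, List

logging.basicConfig(level=logging.INFO, format="%(message)s")
logger = logging.getLogger("tool_calls")

TOOL_CALL_LOG: List[Dict[str, Any]] = []  # in-memory copy for inspection


def logged_tool(func: Callable[..., Any]) -> Callable[..., Any]:
    """Wrap a tool so every call records who called it, why, and what happened."""

    @functools.wraps(func)
    def wrapper(*args: Any, agent: str = "unknown", reason: str = "", **kwargs: Any) -> Any:
        record: Dict[str, Any] = {
            "tool": func.__name__,
            "agent": agent,
            "reason": reason,
            "inputs": {"args": args, "kwargs": kwargs},
        }
        start = time.perf_counter()
        try:
            record["output"] = func(*args, **kwargs)
            record["status"] = "success"
            return record["output"]
        except Exception as exc:
            record["status"] = "error"
            record["error"] = repr(exc)
            raise
        finally:
            # Runs on success and failure, so every call gets a duration.
            record["duration_ms"] = round((time.perf_counter() - start) * 1000, 3)
            TOOL_CALL_LOG.append(record)
            logger.info(json.dumps(record, default=str))

    return wrapper


@logged_tool
def lookup_order(order_id: str) -> str:  # hypothetical tool
    return f"Order {order_id}: shipped"


lookup_order("A-42", agent="support_agent", reason="user asked for order status")
```

Emitting each record as one JSON line keeps the log easy to ship to whichever observability backend is in use.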

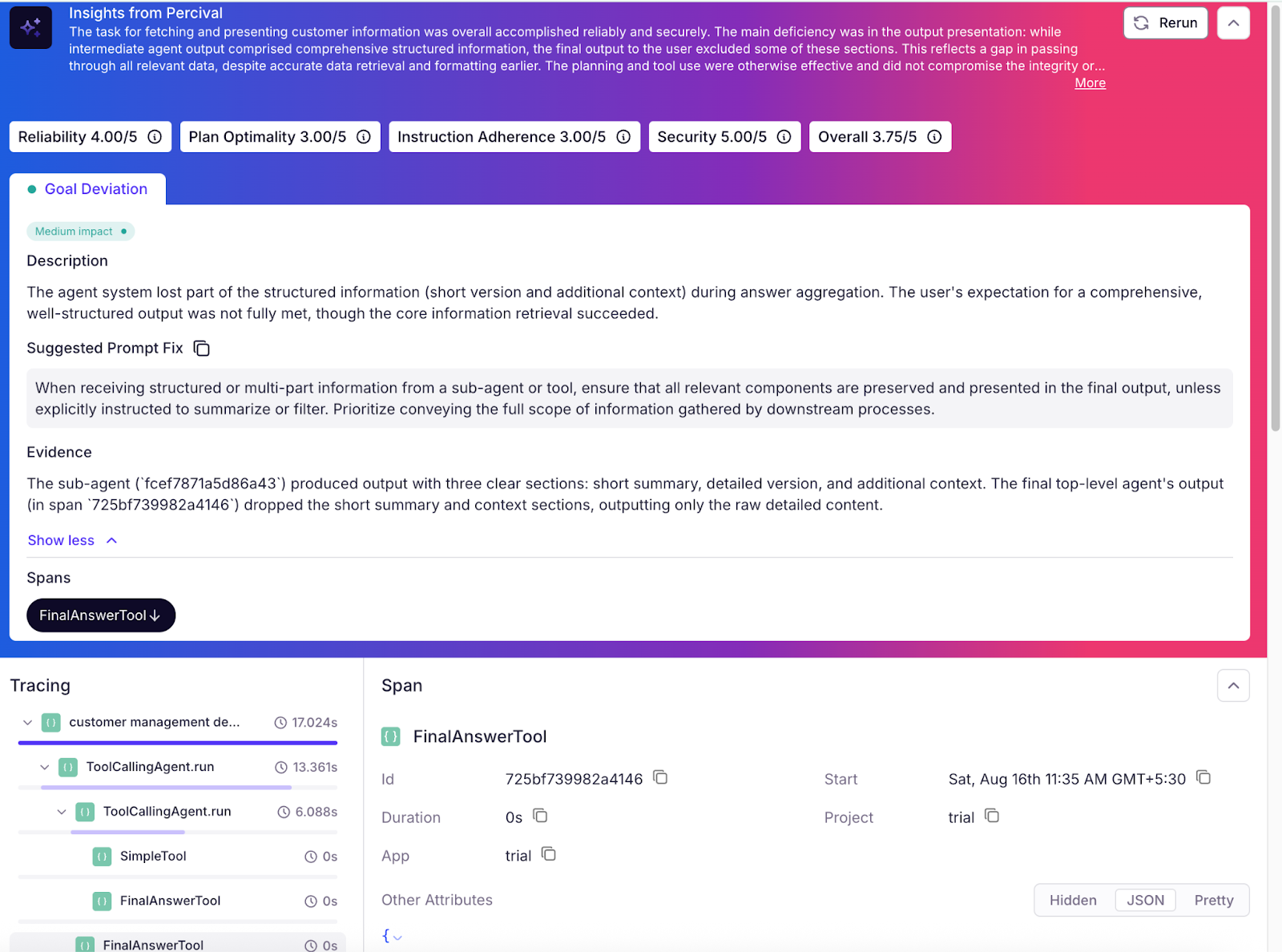

Modern observability platforms, such as Patronus AI, provide sophisticated traces of tool calls and usage. Patronus detects various tool errors, including invalid parameter formats, timeout failures, permission violations, and inappropriate tool selection patterns.

The following are some of the errors related to agent tool usage on the Patronus platform.

Reasoning errors

Reasoning errors can be related to hallucinations, information processing, or decision making.

Hallucinations

- Language-only: The agent fabricates content without using available tools when tools could provide accurate information

- Tool-related: The agent invents tool outputs or claims capabilities that tools don't actually possess

Information processing

- Poor information retrieval: The agent retrieves or cites information that is irrelevant to the current task or query

- Tool output misinterpretation: The agent misreads, misunderstands, or incorrectly applies the results returned by a tool

Decision making

- Incorrect problem identification: The agent misunderstands the overall objective or specific local task requirements

- Tool selection errors: The agent chooses an inappropriate or suboptimal tool for the given job or context

System execution errors

System execution errors are related to configuration errors or resource management errors.

Configuration

- Tool definition issues: Tools are incorrectly declared or misconfigured (e.g., search tool declared as a calculator)

- Environment setup errors: Missing API keys, insufficient permissions, or other foundational setup problems

Resource management

- Resource exhaustion: The system runs out of memory, disk space, or other critical computational resources

- Timeout issues: Model or tool execution exceeds allocated time limits and fails to complete

Planning and coordination errors

Planning and coordination errors related to tool usage are often associated with context management.

Context management

- Context handling failures: The agent fails to retain, access, or correctly utilize relevant context information

- Resource abuse: Unnecessary, redundant, or excessive use of tools, APIs, or system resources

Effective observability enables continuous improvement through pattern analysis, performance optimization, and proactive error prevention. For example, identifying frequently used tool combinations helps optimize common workflows, while spotting anomalous behavior can reveal problems in how the agent calls a tool or in how the tool executes, so they can be corrected early.
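As a rough illustration of this kind of pattern analysis, the sketch below counts which tools tend to follow each other and flags runs that call the same tool repeatedly. The trace data is made up, and the threshold of two repeats is an arbitrary assumption.

```python
from collections import Counter
from typing import List

# Hypothetical traces: each inner list is the ordered tool calls of one run.
traces: List[List[str]] = [
    ["search_customers_by_name", "get_customer_info", "update_customer_status"],
    ["search_customers_by_name", "get_customer_info"],
    ["get_customer_info", "get_customer_info", "get_customer_info", "get_customer_info"],
]


def count_tool_pairs(runs: List[List[str]]) -> Counter:
    """Count how often one tool is immediately followed by another."""
    pairs: Counter = Counter()
    for run in runs:
        pairs.update(zip(run, run[1:]))
    return pairs


def find_repetitive_runs(runs: List[List[str]], max_repeats: int = 2) -> List[int]:
    """Return indexes of runs that call one tool more than max_repeats times."""
    return [
        i for i, run in enumerate(runs)
        if max(Counter(run).values(), default=0) > max_repeats
    ]


pair_counts = count_tool_pairs(traces)
print(pair_counts.most_common(2))
print(find_repetitive_runs(traces))
```

Frequent pairs are candidates for a combined tool, while repetitive runs may point to the resource abuse errors described above.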

The example below shows a customer management system built with smolagents and Patronus AI tracing.

Let’s create a mock customer database:

# Mock customer database
CUSTOMER_DB = {
    "CUST001": {
        "id": "CUST001",
        "name": "John Smith",
        "email": "john.smith@email.com",
        "phone": "+1-555-0123",
        "status": "active",
        "subscription_tier": "premium",
        "join_date": "2023-01-15",
        "last_activity": "2024-06-01"
    },
    "CUST002": {
        "id": "CUST002",
        "name": "Alice Johnson",
        "email": "alice.johnson@email.com",
        "phone": "+1-555-0456",
        "status": "active",
        "subscription_tier": "basic",
        "join_date": "2023-03-22",
        "last_activity": "2024-05-28"
    },
    "CUST003": {
        "id": "CUST003",
        "name": "Bob Wilson",
        "email": "bob.wilson@email.com",
        "phone": "+1-555-0789",
        "status": "inactive",
        "subscription_tier": "premium",
        "join_date": "2022-11-10",
        "last_activity": "2024-02-15"
    }
}

Now, let’s define a few tools to use with the database:

import json

from smolagents import tool


@tool
def get_customer_info(customer_id: str) -> str:
    """
    Retrieves detailed information about a specific customer.

    Args:
        customer_id: The unique customer ID (e.g., "CUST001")
    """
    if customer_id not in CUSTOMER_DB:
        return f"Customer {customer_id} not found in database."

    customer = CUSTOMER_DB[customer_id]
    return json.dumps(customer, indent=2)


@tool
def search_customers_by_name(name: str) -> str:
    """
    Searches for customers by name (partial matches allowed).

    Args:
        name: Customer name or partial name to search for
    """
    results = []
    for customer_id, customer in CUSTOMER_DB.items():
        # Case-insensitive substring match on the customer's name
        if name.lower() in customer["name"].lower():
            results.append({
                "id": customer_id,
                "name": customer["name"],
                "email": customer["email"],
                "status": customer["status"]
            })

    if not results:
        return f"No customers found with name containing '{name}'"

    return json.dumps(results, indent=2)


@tool
def update_customer_status(customer_id: str, new_status: str) -> str:
    """
    Updates a customer's status.

    Args:
        customer_id: The unique customer ID
        new_status: New status ("active", "inactive", "suspended")
    """
    if customer_id not in CUSTOMER_DB:
        return f"Customer {customer_id} not found in database."

    valid_statuses = ["active", "inactive", "suspended"]
    if new_status not in valid_statuses:
        return f"Invalid status '{new_status}'. Valid statuses are: {', '.join(valid_statuses)}"

    old_status = CUSTOMER_DB[customer_id]["status"]
    CUSTOMER_DB[customer_id]["status"] = new_status
    return f"Customer {customer_id} status updated from '{old_status}' to '{new_status}'"

Next, create a customer agent:

from smolagents import LiteLLMModel, ToolCallingAgent


def create_customer_agents(model_id):
    """Create specialized customer management agents"""
    # Customer Information Agent
    customer_info_model = LiteLLMModel(model_id, temperature=0., top_p=1.)
    customer_info_agent = ToolCallingAgent(
        tools=[get_customer_info, search_customers_by_name, update_customer_status],
        model=customer_info_model,
        max_steps=10,
        name="customer_info_agent",
        description="This agent specializes in retrieving, searching, and updating customer information"
    )

    # Support Ticket Agent
    # The ticket and analytics tools used below are defined in the full notebook.
    support_model = LiteLLMModel(model_id, temperature=0., top_p=1.)
    support_agent = ToolCallingAgent(
        tools=[get_customer_support_tickets, create_support_ticket],
        model=support_model,
        max_steps=10,
        name="support_ticket_agent",
        description="This agent handles customer support tickets - retrieving existing tickets and creating new ones"
    )

    # Analytics Agent
    analytics_model = LiteLLMModel(model_id, temperature=0., top_p=1.)
    analytics_agent = ToolCallingAgent(
        tools=[get_customer_analytics],
        model=analytics_model,
        max_steps=10,
        name="analytics_agent",
        description="This agent provides customer analytics and business intelligence insights"
    )

    # Create a manager agent with all specialized agents
    manager_model = LiteLLMModel(model_id, temperature=0., top_p=1.)
    manager_agent = ToolCallingAgent(
        model=manager_model,
        managed_agents=[customer_info_agent, support_agent, analytics_agent],
        tools=[],
        add_base_tools=False,
        name="customer_manager",
        description="Customer management coordinator that delegates tasks to specialized agents"
    )

    return manager_agent

Finally, run a customer management demo:

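import patronus

# Assumes the Patronus SDK has already been initialized with your API key,
# as done in the full notebook.


@patronus.traced("customer management demo 1")
async def run_customer_management_demo():
    """Run a comprehensive customer management demonstration."""
    agent = create_customer_agents("openai/gpt-4o-mini")

    print("Customer Management System Demo\n")

    # Demo 1: Customer lookup
    print("=== Demo 1: Customer Information Lookup ===")
    result1 = agent.run("Find information about customer CUST001")
    print(f"\nResult: {result1}\n")

    return result1


# The function is a coroutine: in a notebook, run it with
# `await run_customer_management_demo()`; in a script, use
# `asyncio.run(run_customer_management_demo())`.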

Running the above produces a Patronus trace of the tools called, along with other analytics, as shown in the screenshot below.

Using the Percival AI debugger from Patronus AI, you can verify that the agent correctly calls the get_customer_info tool. Percival also suggests prompt fixes to ensure the output structure remains intact.

A full and extended version of the above code, including an added dataset, tools, and demo, is available in the notebook. Sign up for Patronus AI (you get an initial $10 credit to try it out) and create a .yaml file containing your Patronus API key. You can then see detailed traces of tool usage, which you can use to further optimize your tools, queries, and prompts.

Guardrails and fallback behavior

Tools can fail due to API timeouts, malformed inputs, or empty response data. Without guardrails, a single failure can disrupt the whole workflow. Robust agent systems implement multiple layers of protection against failures. These safeguards ensure the system's reliability.

Structured validation

Validating input and output data using schemas ensures data integrity throughout tool execution. This includes parameter type checking, range validation, format verification, and enforcement of business rules. Tools should validate inputs before execution and sanitize outputs before returning results to agents.
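Input validation of this kind can be sketched with a standard-library dataclass. The example assumes customer IDs follow the "CUST001" pattern used earlier; `StatusUpdateRequest` and `validate_request` are hypothetical names, and a schema library could fill the same role.

```python
from dataclasses import dataclass

VALID_STATUSES = {"active", "inactive", "suspended"}


@dataclass(frozen=True)
class StatusUpdateRequest:
    """Validated input for a status-update tool."""
    customer_id: str
    new_status: str

    def __post_init__(self) -> None:
        # Type check
        if not isinstance(self.customer_id, str) or not isinstance(self.new_status, str):
            raise TypeError("customer_id and new_status must be strings")
        # Format check: IDs look like "CUST001" (assumed convention)
        if not (self.customer_id.startswith("CUST") and self.customer_id[4:].isdigit()):
            raise ValueError(f"Malformed customer ID: {self.customer_id!r}")
        # Business rule: only known statuses are allowed
        if self.new_status not in VALID_STATUSES:
            raise ValueError(f"Invalid status: {self.new_status!r}")


def validate_request(raw: dict) -> StatusUpdateRequest:
    """Turn untrusted tool arguments into a validated request or raise."""
    unexpected = set(raw) - {"customer_id", "new_status"}
    if unexpected:
        raise ValueError(f"Unexpected fields: {sorted(unexpected)}")
    return StatusUpdateRequest(**raw)


print(validate_request({"customer_id": "CUST001", "new_status": "suspended"}))
for bad in (
    {"customer_id": "42", "new_status": "active"},
    {"customer_id": "CUST001", "new_status": "deleted"},
):
    try:
        validate_request(bad)
    except ValueError as exc:
        print(f"Rejected: {exc}")
```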

Error handling and resilience

Comprehensive try/catch blocks, timeout implementations, and retry logic prevent individual tool failures from cascading into system-wide issues. Timeout mechanisms ensure tools don't hang indefinitely, while intelligent retry logic overcomes temporary failures in external systems.
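A per-attempt timeout combined with retries and exponential backoff might look like the sketch below. It uses only the standard library; `call_with_retry` and `flaky_lookup` are hypothetical names. Note that a timed-out call keeps running in its worker thread, so real cancellation needs support from the tool itself.

```python
import time
from concurrent.futures import ThreadPoolExecutor, TimeoutError as FutureTimeout
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_retry(
    func: Callable[[], T],
    retries: int = 3,
    timeout_s: float = 1.0,
    backoff_s: float = 0.05,
) -> T:
    """Call func with a per-attempt timeout, retrying with exponential backoff."""
    last_error: Exception = RuntimeError("no attempts made")
    for attempt in range(1, retries + 1):
        pool = ThreadPoolExecutor(max_workers=1)
        try:
            return pool.submit(func).result(timeout=timeout_s)
        except FutureTimeout:
            last_error = TimeoutError(f"attempt {attempt} exceeded {timeout_s}s")
        except Exception as exc:
            last_error = exc
        finally:
            # Do not wait for a hung call; its worker thread is left behind.
            pool.shutdown(wait=False)
        if attempt < retries:
            time.sleep(backoff_s * 2 ** (attempt - 1))
    raise last_error


attempts = {"count": 0}


def flaky_lookup() -> str:  # hypothetical tool: fails twice, then succeeds
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise ConnectionError("temporary network failure")
    return "order shipped"


print(call_with_retry(flaky_lookup))
```

Retries are only appropriate for operations that are safe to repeat; a tool that sends an email or charges a card needs an idempotency check first.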

Edge case management

Tools should provide meaningful feedback to agents when they encounter edge cases, such as empty result sets, malformed external data, network connectivity issues, and rate limiting from external services. This allows agents to take appropriate alternative actions.
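One way to give agents this feedback is a uniform result envelope that distinguishes "no data" from "failure". The sketch below is illustrative; `ToolResult`, its status values, and `find_orders` are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class ToolResult:
    """Uniform envelope so the agent can tell 'no data' from 'failure'."""
    status: str  # "ok", "empty", or "error"
    data: List[Dict[str, Any]] = field(default_factory=list)
    message: str = ""


# Hypothetical in-memory data source.
_ORDERS = [{"customer": "CUST001", "total": 120.0}]


def find_orders(customer_id: str) -> ToolResult:
    if not customer_id:
        return ToolResult("error", message="customer_id is required")
    matches = [order for order in _ORDERS if order["customer"] == customer_id]
    if not matches:
        # An empty result is not an error; say so and suggest a next step.
        return ToolResult(
            "empty",
            message=f"No orders for {customer_id}; try searching by customer name",
        )
    return ToolResult("ok", data=matches)


for customer in ("CUST001", "CUST999", ""):
    result = find_orders(customer)
    print(result.status, result.message or result.data)
```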

Rate limits and usage controls

Systems should monitor tool usage patterns and implement controls to prevent excessive API calls, database queries, or resource consumption. For example, it's typically an error if an agent makes ten separate database read requests when a single query would suffice. Advanced systems can detect such patterns and either optimize automatically or flag them for review. Intelligent rate limiting prevents both accidental misuse and potential abuse.
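A simple usage control is a sliding-window limiter that each tool call must pass first. The sketch below is a minimal, single-threaded illustration; `RateLimiter` and `RateLimitExceeded` are hypothetical names, and the injectable clock exists only to make the demo deterministic.

```python
import time
from collections import deque
from typing import Callable, Deque


class RateLimitExceeded(RuntimeError):
    """Raised when a caller exceeds its call budget."""


class RateLimiter:
    """Allow at most max_calls within any window_s-second sliding window."""

    def __init__(
        self,
        max_calls: int,
        window_s: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.max_calls = max_calls
        self.window_s = window_s
        self._clock = clock
        self._calls: Deque[float] = deque()

    def check(self) -> None:
        now = self._clock()
        # Drop timestamps that have left the window.
        while self._calls and now - self._calls[0] >= self.window_s:
            self._calls.popleft()
        if len(self._calls) >= self.max_calls:
            raise RateLimitExceeded(
                f"more than {self.max_calls} calls in {self.window_s}s"
            )
        self._calls.append(now)


fake_now = [0.0]  # controllable clock for the demo
limiter = RateLimiter(max_calls=2, window_s=10.0, clock=lambda: fake_now[0])
limiter.check()
limiter.check()
try:
    limiter.check()
except RateLimitExceeded as exc:
    print(f"Blocked: {exc}")

fake_now[0] = 10.0
limiter.check()  # the window has moved on, so the call is allowed again
print("Allowed after the window elapsed")
```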

Human-in-the-loop fallbacks

Critical workflows should include escalation paths to human operators when automated processes encounter issues that cannot be resolved. Such human-in-the-loop fallbacks ensure system reliability while also maintaining the efficiency benefits of automation for routine operations.
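An escalation path can be sketched as a gate around action execution. In the example below, the list of actions that need approval, the `approve` callback, and the review queue are all hypothetical stand-ins for a real approval workflow.

```python
from typing import Callable, List

# Hypothetical policy: these actions always need human sign-off.
ACTIONS_REQUIRING_APPROVAL = {"delete_customer", "issue_refund"}


def execute_action(
    action: str,
    run: Callable[[], str],
    approve: Callable[[str], bool],
    review_queue: List[str],
) -> str:
    """Run routine actions directly; route critical or failing ones to a human."""
    if action in ACTIONS_REQUIRING_APPROVAL and not approve(action):
        review_queue.append(action)
        return f"'{action}' queued for human review"
    try:
        return run()
    except Exception as exc:
        review_queue.append(action)
        return f"'{action}' failed ({exc}); escalated to a human operator"


def no_auto_approval(action: str) -> bool:
    return False  # stand-in for a real approval step


def broken_sync() -> str:
    raise ConnectionError("CRM unreachable")


queue: List[str] = []
print(execute_action("send_receipt", lambda: "receipt sent", no_auto_approval, queue))
print(execute_action("issue_refund", lambda: "refund issued", no_auto_approval, queue))
print(execute_action("sync_crm", broken_sync, no_auto_approval, queue))
print(queue)
```

Routine actions still run without delay, so the human only sees the cases that policy or failures push their way.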

Testing guardrails with RL environments

Reinforcement learning (RL) environments provide a controlled way to test how agents interact with tools under different scenarios. Instead of exposing unverified agents directly to production systems, RL environments simulate realistic tasks and edge cases. This makes it possible to validate guardrails, fallback logic, and compliance rules in a safe sandbox before deployment.
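To make the idea concrete, the toy environment below injects tool failures and rewards a policy for falling back instead of answering from a failed tool. It is a deliberately simplified sketch with a reset/step interface and is not the Patronus AI environment API; every name in it is hypothetical.

```python
import random
from typing import Callable, Tuple


class FlakyToolEnv:
    """Toy episode: a tool fails with some probability; reward safe behavior."""

    def __init__(self, failure_rate: float, seed: int = 0) -> None:
        self.failure_rate = failure_rate
        self._rng = random.Random(seed)
        self.tool_failed = False

    def reset(self) -> str:
        # Each episode starts with a tool call that may have failed.
        self.tool_failed = self._rng.random() < self.failure_rate
        return "tool_error" if self.tool_failed else "tool_ok"

    def step(self, action: str) -> Tuple[float, bool]:
        """Return (reward, done). Answering from a failed tool is penalized."""
        if self.tool_failed:
            reward = 1.0 if action == "fallback" else -1.0
        else:
            reward = 1.0 if action == "answer" else 0.0
        return reward, True


def evaluate(policy: Callable[[str], str], episodes: int = 100) -> float:
    """Average reward of a policy over simulated episodes."""
    env = FlakyToolEnv(failure_rate=0.3, seed=42)
    total = 0.0
    for _ in range(episodes):
        observation = env.reset()
        reward, _done = env.step(policy(observation))
        total += reward
    return total / episodes


def guarded_policy(observation: str) -> str:
    return "fallback" if observation == "tool_error" else "answer"


def naive_policy(observation: str) -> str:
    return "answer"  # ignores tool errors


print("guarded:", evaluate(guarded_policy))
print("naive:", evaluate(naive_policy))
```

The guarded policy scores higher because it never answers from a failed tool, which is the kind of guardrail behavior a sandbox is meant to confirm before deployment.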

Patronus AI offers domain-specific RL environments replicating common workflows such as coding tasks, database queries, or customer interactions. These environments help teams confirm that agents respect validation schemas, handle failures gracefully, and follow established policies. By rehearsing in an RL environment first, organizations can reduce risks and deploy agents with greater confidence.

Conclusion

AI agents can achieve their full potential when equipped with well-designed tools that extend the agents' capabilities into real-world actions and data access. Declarative tool definition and role-aware access control, combined with comprehensive observability and robust fallback mechanisms, form a solid foundation for building reliable and scalable agent systems.

As AI agents become increasingly central to many operations, implementing a robust tool ecosystem becomes crucial to achieving intelligent and adaptive systems. Tools are the bridge that transforms artificial intelligence from a passive information source into an active agent that makes decisions and takes action.
