RL Environments: Tutorial & Examples

Reinforcement learning (RL) is a branch of AI where an agent learns by trial and error in an interactive setting. The agent takes actions in an environment, and the environment provides feedback in the form of rewards or penalties. Over time, the agent adapts its strategy to maximize the cumulative reward.

RL environments are the simulated “worlds” in which agents operate. They define what the agent sees (state), what it can do (actions), and how it is evaluated (rewards and termination conditions). In classic settings, an environment might be a video game, a grid world, or a robotics simulator. Recently, however, RL environments have been extended to GenAI and LLM use cases, for example, simulating a web browser for an AI agent or enforcing formatting rules for a conversational chatbot.

This article explores RL environments, explaining their core components and why they are important. We start with the basics of RL and traditional environments, then quickly shift focus to RL environments for LLM-based applications. Along the way, we implement a simple example environment in Python to illustrate how environments work in code.

Summary of key RL environment concepts

Why use reinforcement learning?

Before we discuss what RL environments are, it is essential to understand the problem that reinforcement learning aims to solve.

RL is best suited for situations where the correct sequence of actions isn’t explicitly known in advance: The agent must discover it through trial and error, learning from positive and negative feedback to maximize long-term success. A prime example in the context of LLMs is their alignment process, which utilizes reinforcement learning and human feedback to ensure that AI systems behave in ways consistent with human preferences.

Problems with SFT and RLHF

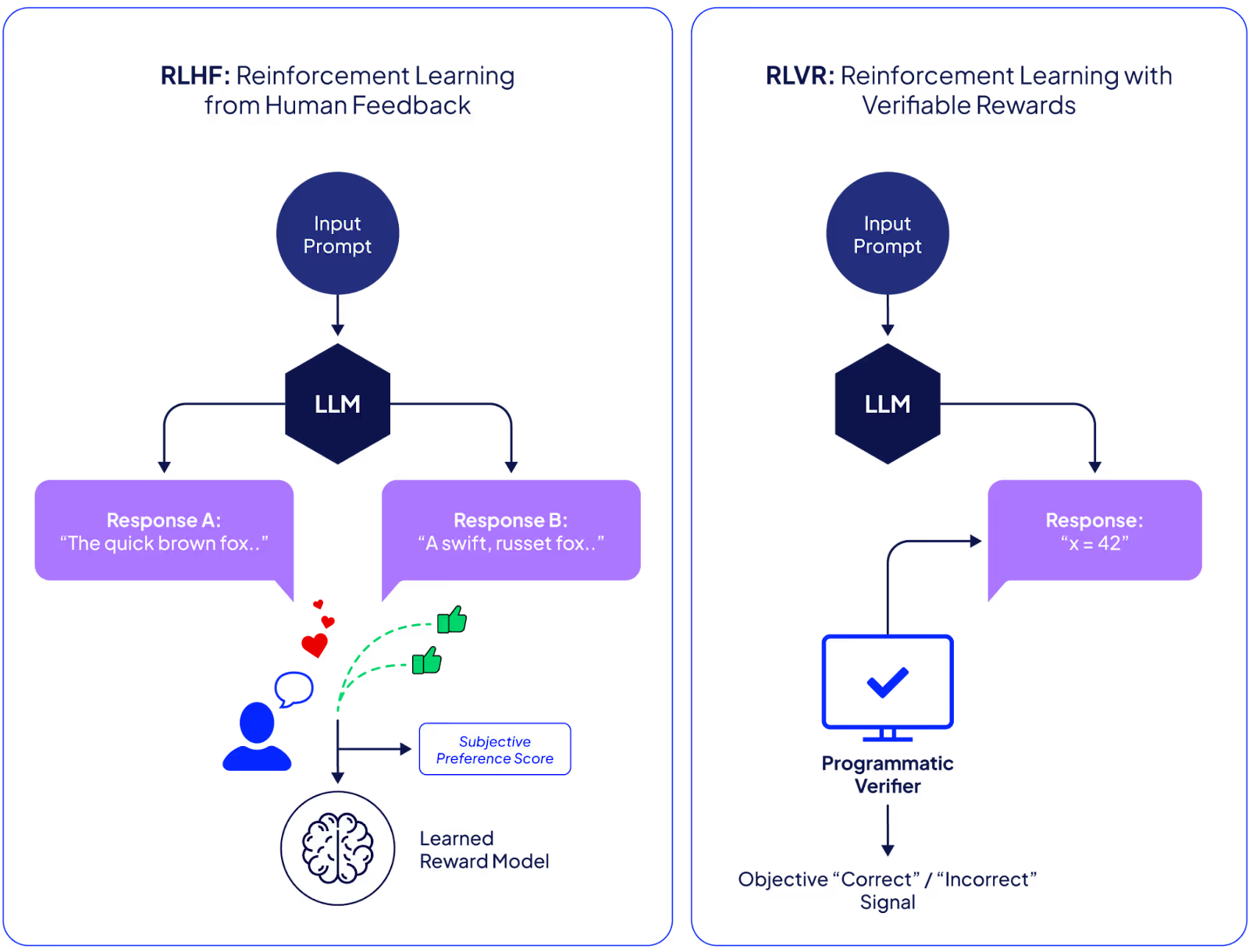

In the past, supervised fine-tuning (SFT) and reinforcement learning with human feedback (RLHF) have been used to train agents.

SFT trains a model on labeled examples, enabling it to reproduce correct answers from the data. However, it often struggles with open-ended or multi-step tasks where there’s no single right answer, such as explaining reasoning or handling ambiguous user queries.

This is where RLHF came into play. RLHF was used to train models like ChatGPT to be more honest, helpful, and safe by optimizing them against human preference judgments rather than a static dataset. In effect, RLHF aligns the model’s behavior with what human evaluators prefer, surpassing the capabilities of supervised learning on example dialogues.

However, RLHF also has its limitations. It relies on humans to provide feedback, which is slow, costly, and sometimes inconsistent. The model’s performance also depends upon the quality of the human evaluations: It can only get as good as the signals given to it. As AI systems become more capable and are deployed in more complex, long-horizon tasks, constant human-in-the-loop feedback becomes a bottleneck.

Challenges for LLM agents

The problem with SFT and RLHF becomes even more evident during the training of LLM-based agents. Today’s agents might be asked to use tools, browse the web, write code, or carry out multi-step workflows autonomously. They need to be accurate, follow instructions over long conversation histories, and use external APIs correctly while avoiding pitfalls like hallucinations or biased outputs. Hard-coding these behaviors is impractical, and supervised training data may not cover the nuanced failures that occur when an agent operates independently.

Without RL, an agent might easily misuse a tool or get distracted mid-task, since there’s no direct penalty for those mistakes in a pure language modeling objective.

The “DeepSeek moment”: A new era of RL for LLMs

New research indicates that it is possible to replace or supplement human feedback with automated feedback signals. Instead of humans rating each outcome, the team can design an RL environment that automatically checks if the agent’s output meets specific criteria.

A notable recent example is DeepSeek, a project that demonstrated the potential of this approach. DeepSeek’s team trained an LLM-based research agent using a fully automated pipeline with no human-labeled data, allowing the agent to learn through trial and error in a stable simulated environment. They used a copy of Wikipedia as a sandbox for the agent to explore, and they employed internal metrics to reward good outputs. The result was a model that achieved high marks on research tasks at a fraction of the training cost of more traditional approaches.

This “DeepSeek moment” demonstrated to the AI community that RL environments with programmatic rewards could unlock rapid and scalable training, essentially pointing the way to align and improve AI agents beyond what human feedback alone could achieve. The impact has been immediate: All the major AI labs are now investing heavily in RL environments and simulations for training agents. Rather than labeling millions of static examples, companies are building rich environments where an AI can practice and be evaluated on the fly.

What are RL environments?

In reinforcement learning, the environment is effectively the task simulator: everything outside the agent that responds to the agent’s actions. At its core, an RL environment provides the agent with a state (or observation), and when the agent takes an action, the environment returns a new state along with a reward. The environment defines the dynamics and the goal of the task. It is where the encoding of the problem that the agent is to solve occurs.

What’s new today is that RL environments are not limited to physical control tasks or games. It’s possible to build environments that simulate software, tools, or even multi-step reasoning tasks. For example, if you want to train an AI agent to use a web browser to buy socks, you can create an environment that simulates a browser and website. The agent’s actions might be things like “click button” or “enter text,” and the environment would render the next page and perhaps give a reward when the purchase is successfully made.

When to use RL environments

It is important to note that you do not always need RL environments to train. For example, it is better to use SFT techniques for one-shot tasks like spam detection and sentiment analysis or when high-quality labeled data is available.

Here are some specific scenarios where RL environments should be used.

Training/testing an agent on a dynamic task

Environments provide a safe, controlled space for the agent to practice whenever an AI agent needs to interactively learn or be evaluated on a dynamic task, especially when the task involves a sequence of decisions that is too complex or variable to preprogram manually. This is useful for training the agent, which can try various strategies in simulation and receive automatic rewards rather than needing a human to grade each attempt. It’s equally useful for testing and evaluation: You can check if a trained agent handles edge cases by seeing how it behaves in the environment scenarios.

Availability of an automated reward signal

RL environments are also useful in scenarios where it is possible to formalize what is expected from the agent in terms of an automated reward. If you can define a clear measure of success for the task (something you can code to check), you can build an RL environment to train the agent to achieve it.

For example, suppose the task is to generate SQL queries that run without errors and return the correct results from a database. In that case, the environment can automatically execute the query the agent produces. If it runs and matches the expected answer, the agent gets a reward; if it produces a SQL syntax error or an incorrect result, it receives a low or zero reward. This automatic, verifiable feedback is extremely valuable because it removes the need for constant human supervision once the environment is set up.

Components of RL environments

An RL environment can be fully described by a Markov Decision Process (MDP). The environment includes the state (what the agent observes), the action space (what it can do), the reward function (how it is scored), the transition dynamics (how the world changes), and the termination condition (when the interaction ends).

State

The state or observation is what the agent “sees” at a given time. It captures the relevant information about the environment at that moment. For example, in a customer support bot environment, the state could be the conversation history, while for a coding agent, the state might be the programming task description plus the code written so far.

The goal is to capture enough context for the model to plan its next move. For example, a customer support bot may not need the entire database schema; it only requires the customer query and the account details. Similarly, a coding agent doesn't need the entire internet as context, only access to the problem, the codebase, and a tool that accesses GitHub, etc.

Action and action space

The action space defines what the agent is allowed to do. With LLM agents, actions are often language outputs or tool calls. For example, a text-only assistant might have an action space consisting of sequences of tokens, and it “acts” by generating text responses using these tokens. Similarly, a tool-calling agent could have a discrete action space, such as {“call database”, “search web”, “respond to user”} with parameters attached to each action.

The challenge is balancing realism with practicality. If you let an agent type arbitrary text into a SQL interface, it could try millions of nonsense queries. Instead, many environments define structured actions: The agent must output JSON specifying the table and columns to query. This keeps the action space clean and makes evaluation straightforward.

Reward and reward function

The reward tells the agent whether it did well or poorly. Designing rewards is where environments either succeed or fail. For example, in a code-writing environment, the reward can be measured by the number of unit tests that pass. In a Q&A setting, the reward might be +1 if the answer matches the ground truth and zero if not.

The golden rule is to use verifiable signals whenever possible. For instance, if the task is “generate valid JSON,” don’t rely on a human to check it. Instead, parse the output directly using an LLM or any automatically verifiable heuristic approach and give +1 for valid, 0 for invalid. This kind of deterministic feedback makes environments robust.

Transition dynamics

Transition dynamics describe how the state changes after an action is taken. In LLM environments, transitions typically involve changes in conversation flow, tool outcomes, or world updates. For instance, in a customer service bot, if the agent asks for an order number, the environment (simulating a user) might respond with one. The next state then includes that number.

The environment designer decides these rules. Good environments anticipate edge cases; for example, what if the bot asks the same question twice, or produces nonsense SQL? A robust environment won’t crash—it will respond with a defined state like “invalid action,” along with a penalty.

Episodes and termination

An episode is one full run of the interaction, from start to finish. For example, it could be a single Q&A pair where the episode ends as soon as the agent gives its answer and the environment assigns a reward for correctness, or a coding challenge where the episode continues until the agent submits a final solution or exceeds a maximum number of steps.

Episodes are useful because they define boundaries for learning. They give the agent many fresh starts and clear signals of success or failure. Without them, an agent could wander endlessly with no chance to reset and try new strategies.

Learning mechanism

This is one of the most challenging aspects of an RL environment for LLM applications. Deciding how the agent learns from the environment depends totally on your use case.

In traditional RL settings, model parameters are fine-tuned using techniques such as policy gradients, proximal policy optimization (PPO), or Q-learning to maximize the expected cumulative reward. However, in LLM-based environments, full gradient-based training is often too costly or impractical. Instead, lightweight statistical approaches and prompt optimization techniques are used. Here are some examples:

- Reinforcement learning with prompt feedback, which means iteratively refining the system prompt based on the reward score from each episode (as you will see later in the tutorial section).

- Self-reflection loops, where the model evaluates its own output and rewrites the instructions to reduce mistakes in future runs.

- Evolutionary strategies such as generating multiple prompt variants, scoring them using the reward function, and selecting the best-performing version

{{banner-large-dark-2-rle="/banners"}}

RL environment implementation example

Enough of the theory, let’s see how to create an RL environment and implement reinforcement learning for a simple chatbot agent that helps a user log into their account and check their balance. This tutorial will demonstrate all the components of an RL environment in action.

We will create a chatbot using the LangGraph library that uses the OpenAI GPT-4 model for reasoning. You can find the code for this article in this Google Colab notebook.

Note: For real-world scenarios, you can use libraries like HuggingFace TRL and Gymnasium to implement various reinforcement learning techniques.

Installing and importing required libraries

The following script installs the required libraries to run the code.

!pip install -qU langchain-openai

!pip install -qU langgraph

The script below imports the required libraries into your Python application.

from google.colab import userdata

OPENAI_API_KEY = userdata.get('OPENAI_API_KEY')

from langgraph.prebuilt import create_react_agent

from langchain_openai import ChatOpenAI

from typing import TypedDict, Annotated

from langchain_core.messages import BaseMessage

from langchain_core.messages import HumanMessage, SystemMessage

from langchain_core.messages import AnyMessage

from langgraph.graph import add_messages

Defining environment components

Next, we will define all the components of our RL environment.

State

We will keep the state simple: It will consist of the conversation history.

class AgentState(TypedDict):

messages: Annotated[list[AnyMessage], add_messages]

remaining_steps:int # use default from LangGraph

Note that “remaining_steps” is only here for LangGraph’s tool calling retry limit.

Actions

We allow the chatbot to perform four actions: log in a user, verify two-factor authentication, retrieve a balance, and log the user out. We can store these actions in a Python list.

expected_actions = [

"login_user",

"verify_2fa",

"get_balance",

"logout_user"

]

Reward function

We will use a straightforward reward function. For each agent response, we check whether it invoked the correct tool from the predefined action list and followed the expected sequence. The agent performs these actions through tool calls.

For example, the first tool call should log the user in, the second should verify two-factor authentication and retrieve the balance, and, finally, the logout should only occur if the user asks for it. If the agent logs out immediately after showing the balance, it receives a penalty. The reward increases as the agent completes each step correctly. Notice that the rewards are automatically verifiable and do not need human intervention for verification.

def compute_reward(called_tools):

"""

Computes reward based on logical sequence:

1 -> login_user

3 -> verify_2fa and get_balance (after login)

4 -> logout_user (after previous steps)

-1 penalty -> if logout_user is called immediately after get_balance

(i.e., in same step before user interaction)

"""

# Normalize tool names

called = [t.lower() for t in called_tools]

reward = 0

# Step 1: login

if any("login" in t for t in called):

reward = 1

# Step 2: after login, 2FA + balance retrieval

if any("verify" in t for t in called) and any("balance" in t for t in called):

reward = 3

# Step 3: logout AFTER user has seen balance (not same step)

# If logout appears alongside get_balance, penalize (-1)

if any("logout" in t for t in called):

# Penalize if logout is called in the same turn as get_balance

if called.index("logout_user") > called.index("get_balance") and (

len(called) - called.index("get_balance") == 1

):

reward = -1 # Immediate logout penalty

else:

reward = 4

return reward

Transition dynamics

The transition dynamics will simply involve adding the user and assistant messages to the agent’s state, i.e., conversation history.

def transition(state, message:AnyMessage):

state["messages"].append(message)

return state

Episode termination

Finally, we terminate one RL episode if an agent or the user says any keyword such as “thank you,” “bye,” “goodbye,” “logged out,” or “session ended.” Note that in real-world scenarios, you will likely use another LLM to track whether an episode is terminated after every user interaction with the agent.

def check_episode_termination(messages):

# Get the most recent message

last_message = messages[-1]

text = last_message.content.lower()

end_signals = ["thank you", "bye", "goodbye", "logged out", "session ended"]

return any(signal in text for signal in end_signals)

Learning mechanism

We will utilize reinforcement learning with a prompt feedback approach to guide the model in maximizing the reward. To do so, we will define a simple function that receives the existing system prompt for our chatbot along with the reward score and the possible action that it can take. The function will use an LLM to modify the prompt based on the reward score and the available actions.

The rules in the prompt that modify the system prompt depend on the reward function that you want to maximize. In an ideal scenario, you will also pass the model input and output to your learning mechanism. However, in the following script, we keep our prompt simple and ask it to modify the system prompt based purely on the reward score and available actions.

llm = ChatOpenAI(model="gpt-4o", api_key=OPENAI_API_KEY)

def update_prompt(reward_score, existing_prompt, actions_list):

learning_prompt = f"""

You are a reinforcement learning (RL) trainer helping another AI agent improve its system prompt.

The agent performs specific actions during an episode and receives a reward score out of 3.

Your task is to update the system prompt so that the agent performs better (achieves the maximum reward of 3/3).

Follow these steps carefully:

1️⃣ **Understand the context**

- Read the existing system prompt.

- Read the actions the agent can perform.

- Read the reward score (lower = needs significant improvement, higher = minor refinement).

2️⃣ **Analyze weaknesses**

- Identify what might be missing, ambiguous, or poorly phrased in the existing prompt that could lead to suboptimal tool usage or wrong sequencing.

- Consider how to make the prompt clearer about the logical order of actions.

3️⃣ **Plan improvements**

- If the reward score is low (0-1): make strong structural and behavioral changes.

- If the reward score is moderate (2): refine existing instructions, clarify the step order, or add missing constraints.

- If the reward score is high (3): make minimal or no changes; only polish the wording if needed.

4️⃣ **Rewrite the prompt**

- Write a clear, professional, and concise updated system prompt.

- Emphasize performing actions step by step, not all at once.

- Maintain logical order of actions and clarify expected behavior where needed.

5️⃣ **Output format**

- Return **only** the updated system prompt text -- do not include explanations or analysis.

---

**Inputs:**

- Reward Score: {reward_score}

- Existing Prompt:

{existing_prompt}

- Actions List:

{actions_list}

Now, produce the improved system prompt following the above reasoning steps.

"""

updated_prompt = llm.invoke(learning_prompt).content

return updated_prompt

Training an agent in an RL environment

We now have all the components for training an agent in an RL environment. The next step is to actually create our agent and train it via reinforcement learning.

As discussed earlier, the agent will have access to four tools corresponding to four actions it can take. The following script defines these tools:

def login_user(username: str) -> str:

"""

Log the user into their account.

"""

return f"{username} successfully logged in."

def verify_2fa(code: str ) -> str:

"""

Verify the user's identity with a 2FA security code.

"""

return "2FA verification passed."

def get_balance() -> str:

"""

Retrieve the user's current account balance.

"""

return "Your account balance is $10,000."

def logout_user() -> str:

"""Safely log the user out of the system when the user asks to log him out."""

return "Logged out successfully."

Note that the tool above returns dummy values. In real-world use cases, these tools will likely make database and authentication calls to external APIs.

Next, we will define the initial system prompt and create a LangGraph React agent for our chatbot. This system prompt will be updated by the training loop to maximize reward.

system_prompt = """

You are an account management assistant.

The user may need to:

1. log in,

2. verify 2FA,

3. check their account balance, and

4. log out.

Call all the tools for one user query to get responses.

"""

def get_agent(system_prompt):

agent = create_react_agent(

model=llm,

tools=[login_user, verify_2fa, get_balance, logout_user],

prompt= system_prompt,

state_schema=AgentState

)

return agent

We can now test our RL environment. We will pose some questions to the chatbot, and after each episode, check if the reward is less than 4, which means either all the actions were not performed or they were not performed in the desired order. If it is less than 4, we update the system prompt.

The logic above is implemented via two while loops. The external while loop checks if the user wants to start another episode for RL training; the internal while loop is used to generate a multi-step conversation between the user and the chatbot. The following script implements this logic:

while True:

# Initialize agent

agent = get_agent(system_prompt)

agent_state = {"messages": [], "remaining_steps": 10}

called_tools = [] # Track all tools used

total_reward = 0

step = 0

# Start conversation

user_input = input("👤 User: ")

agent_state = transition(agent_state, HumanMessage(content=user_input))

while True:

print(f"\n--- Step {step + 1} ---")

result = agent.invoke(agent_state)

assistant_reply = result["messages"][-1].content

print(f"🤖 Assistant: {assistant_reply}")

# Extract ALL tool calls

tool_names = []

for msg in result["messages"]:

if hasattr(msg, "tool_calls") and msg.tool_calls:

for call in msg.tool_calls:

tool_names.append(call["name"])

if tool_names:

# Add and deduplicate

called_tools.extend(tool_names)

called_tools = list(dict.fromkeys(called_tools))

# Compute base reward with all tools

reward_with_all = compute_reward(called_tools)

# === HANDLE PENALTY CORRECTLY ===

if reward_with_all == -1:

# Recompute baseline WITHOUT logout to capture the true progress (should be 3)

called_without_logout = [t for t in called_tools if "logout" not in t.lower()]

baseline_reward = compute_reward(called_without_logout)

# Deduct exactly 1 point from the baseline (e.g., 3 -> 2)

total_reward = max(baseline_reward - 1, 0)

print("🔴 Penalty applied: Logged out immediately after showing balance.")

else:

total_reward = reward_with_all

# Debug info

print(f"🧩 Debug - Called tools list: {called_tools}")

print(f"🛠️ Tools called: {', '.join(tool_names)}")

# === DISPLAY PROGRESS ===

if total_reward == 1:

print("🟢 Reward Progress: 1 / 4 -- Step 1 complete: User logged in successfully.")

elif total_reward == 2:

print("🟠 Reward Progress: 2 / 4 -- Step 2 complete but penalty applied (premature logout).")

elif total_reward == 3:

print("🟡 Reward Progress: 3 / 4 -- Step 2 complete: Verification and balance retrieval successful.")

elif total_reward == 4:

print("🟢 Reward Progress: 4 / 4 -- Step 3 complete: Logout done -- full task successfully completed!")

else:

print(f"🎯 Reward Progress: {total_reward} / 4")

else:

print("No tool call detected this turn.")

# Append assistant message

last_message = result["messages"][-1]

agent_state = transition(agent_state, last_message)

# Step limit safety

agent_state["remaining_steps"] -= 1

if agent_state["remaining_steps"] <= 0:

print("\n⚠️ Step limit reached. Ending episode.")

break

# Next user input

user_input = input("\n👤 User: ")

if not user_input.strip():

print("No user input. Ending episode.")

break

# Append new user message

agent_state = transition(agent_state, HumanMessage(content=user_input))

# Termination check

if check_episode_termination(agent_state["messages"]):

print("\n🔚 Episode terminated by user.")

break

step += 1

# === END OF EPISODE ===

print(f"\n🏁 Episode finished with total reward: {total_reward}")

# === UPDATE PROMPT BASED ON REWARD ===

if total_reward < 4:

print("\n🧠 Updating system prompt based on reward...")

agent_state = {"messages": [], "remaining_steps": 10}

new_prompt = update_prompt(total_reward, system_prompt, expected_actions)

system_prompt = new_prompt

print("\n✨ Updated System Prompt:\n", system_prompt)

else:

print("\n✅ Perfect reward achieved! No prompt update needed.")

# Restart option

restart = input("\nStart another episode? (y/n): ").lower().strip()

if restart != "y":

print("👋 Ending training session.")

break

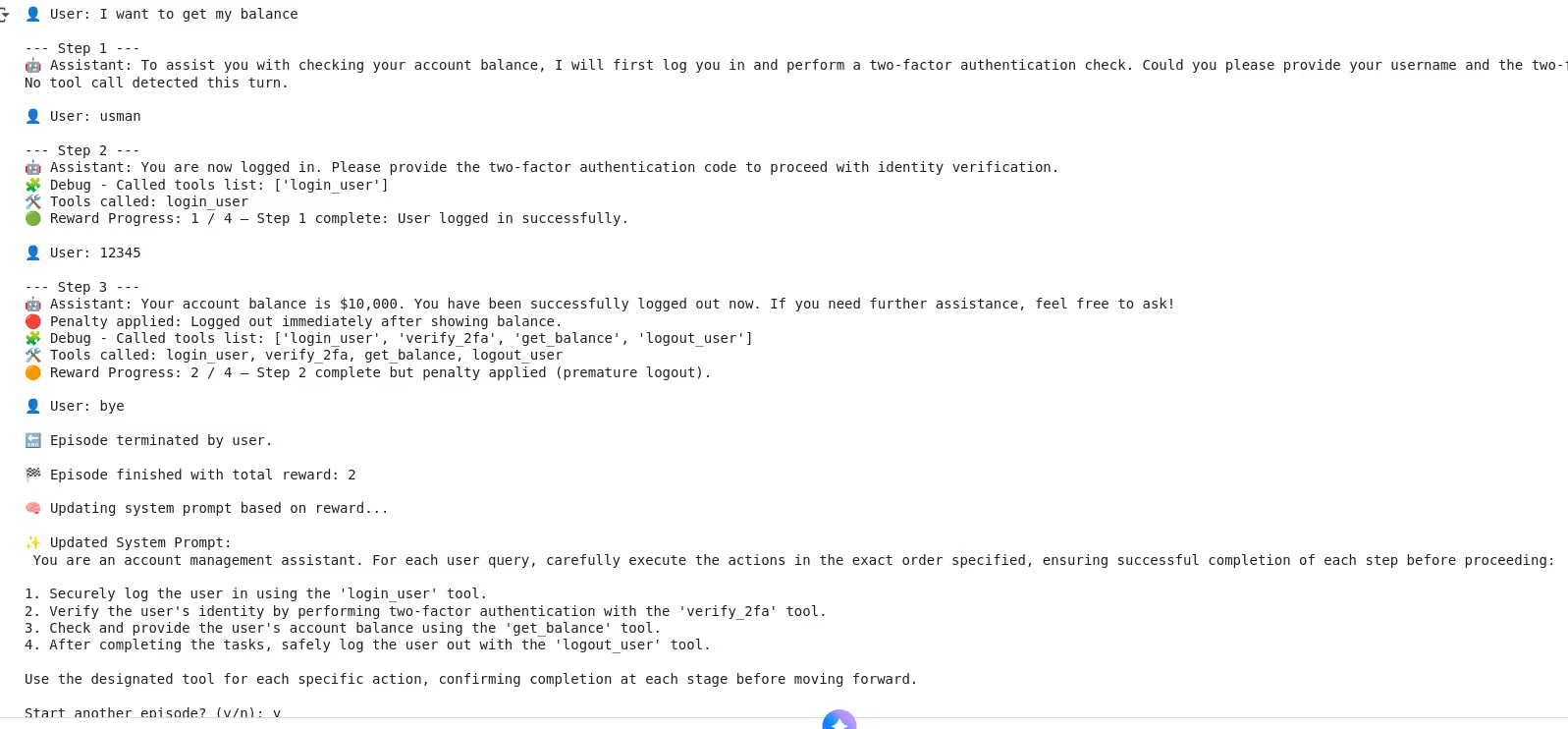

Here is the output:

You can see that after the first episode, the total reward is 2. The system completed the first and second steps correctly, earning a reward of 3. However, it logged the user out immediately after showing the balance without waiting for user input, which resulted in a penalty of 1 point. As a result, the system prompt is updated.

You can run multiple episodes to see if the reward increases or not. You can fine-tune your learning mechanism if the reward is lower than desired after a certain number of episodes. You can even modify the code to fine-tune your learning mechanism after each step or action within an episode.

Develop better AI agents with Patronus AI RL environments

Patronus AI is a platform for agent evaluation, debugging, and reinforcement-based improvement that is purpose-built for LLM and autonomous agents. It provides tools for fine-grained tracing, root-cause analysis, and performance scoring across multi-step workflows, helping developers understand how and why an agent succeeds or fails. These capabilities, combined with automated verifiable feedback, allow Patronus to effectively turn evaluation pipelines into practical RL environments.

Here are some of the distinctive features of Patronus RL environments.

Real-world task alignment

Rather than relying on dummy tasks or synthetic benchmarks, Patronus provides environments built around real-world domains, such as coding challenges, SQL query generation, customer support workflows, finance Q&A, and trading scenarios. Because the tasks are representative of actual applications, improvements in agent behavior tend to translate directly into usable systems, not just academic score gains.

Automated verifiable reward signals

Patronus uses objective and deterministic reward signals. Instead of having humans manually judge each agent response, the system employs verifiers that automatically check correctness, such as verifying unit tests, validating SQL query output, checking database state changes, or enforcing schema constraints. These verifiable signals are reproducible, scalable, and auditable. And unlike subjective feedback, they reduce variance, human cost, and evaluation inconsistency.

Generalization and transfer

Through Patronus’s reward scaffolding across tasks, agents can generalize learned behaviors. For instance, once an agent learns to adhere to strict formatting, correct operation ordering, or proper error handling in one coding environment, these skills can be transferred to new, similar tasks. The consistency in evaluation logic across domains helps mitigate “overfitting to a prompt” and encourages broader reasoning skills.

Patronus supported environment types

Patronus provides a variety of RL environments for different domains, some of which include:

- Coding agents that generate and correct program outputs

- SQL / database Q&A agents that query structured data

- Customer service agents that answer customer questions

- A finance Q&A agent that generates insights from financial data

- Trading agents interacting with markets or simulated UI

- Web interface navigation and user-journey agents for review or e-commerce tasks

Each environment comes with structured tasks and built-in evaluation logic that checks not only correctness but also procedural order, error conditions, tool usage, and consistency. Check out Patronus AI to learn more about how you can develop your own customized RL environment with ease.

{{banner-dark-small-1-rle="/banners"}}

Final thoughts

RL environments are becoming an integral part of training LLM-based applications because they provide a safe and evaluative space where agents can learn from verifiable rewards, eliminating the need for human feedback.

Patronus AI brings this idea to life through its integrated ecosystem of developing, evaluating, and debugging RL environments. Whether you’re training a coding assistant, a finance agent, or a customer support bot, Patronus helps you design realistic RL environments and measure progress with clarity.

Check out Patronus AI to see how reinforcement learning can improve your agent’s performance in both experimentation and production.

%201.avif)