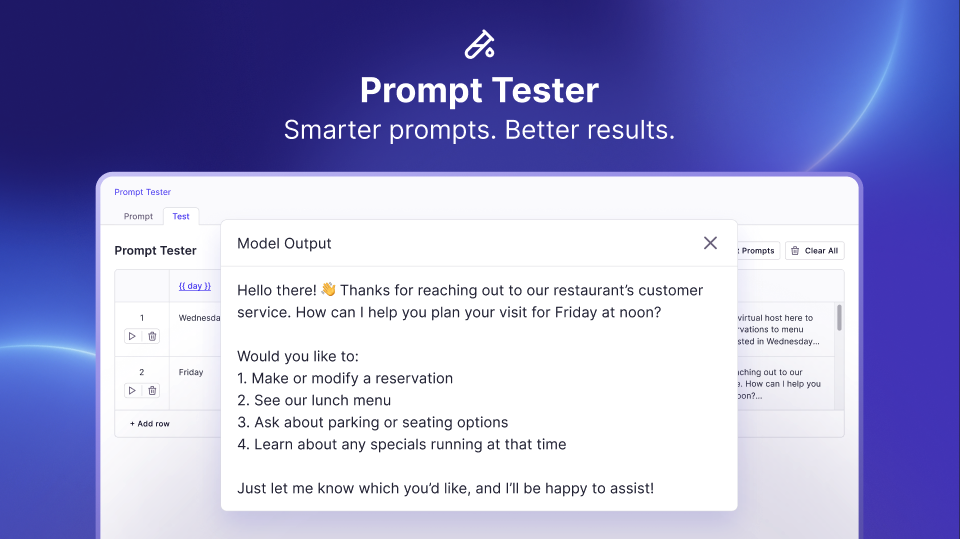

Prompt Tester: Faster Iterations on Your Prompts

Background

A few weeks ago, we introduced Prompt Management. Today, we want to dive deeper into Prompt Tester. Small prompt changes can have large effects on model output. With Prompt Tester, we want to make those effects easier to visualize and iterate on.

Getting Started

Things you need to get started:

- Prompt (a message you want to start with)

- Context that will get injected (e.g., a template variable or an additional user message)

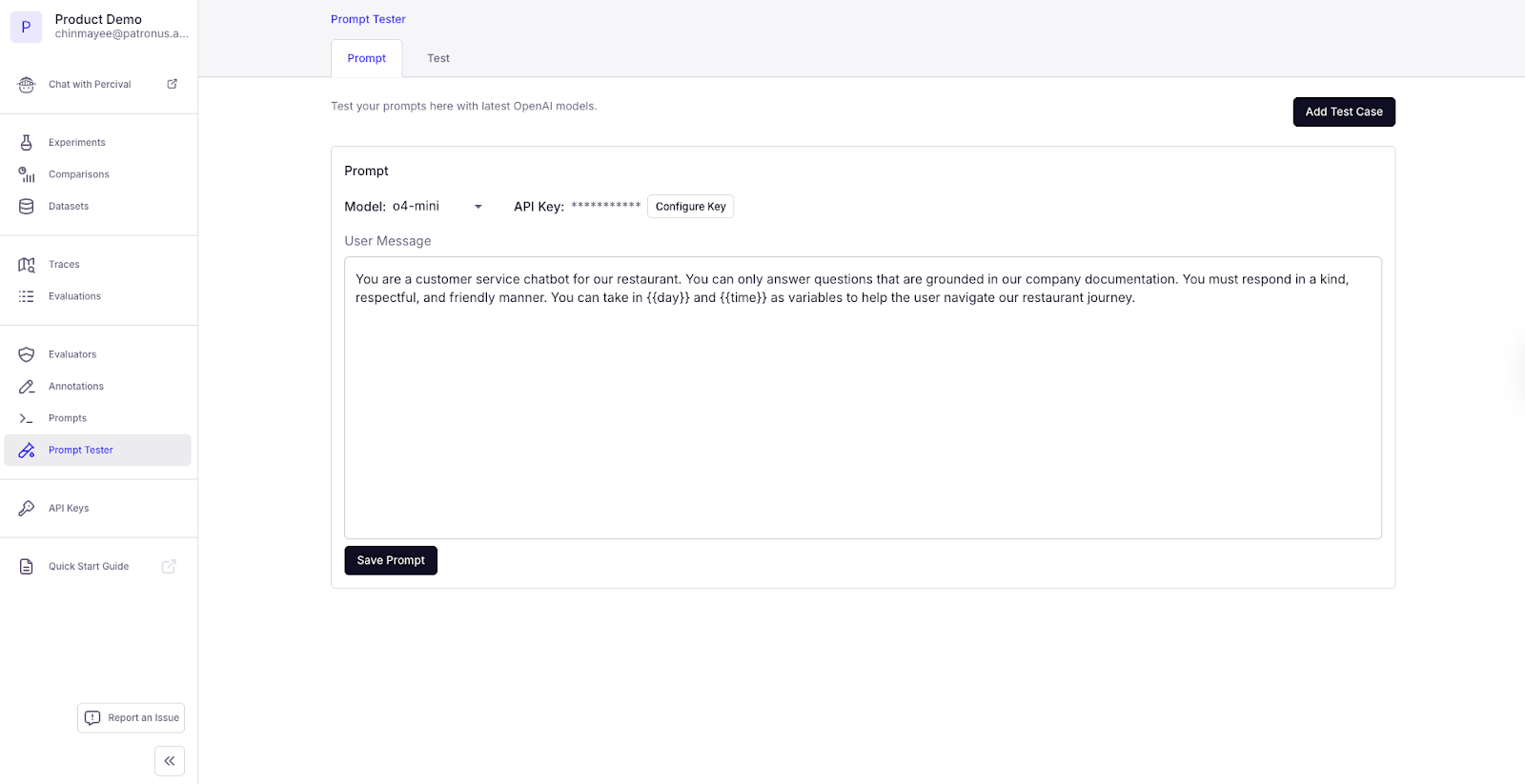

Considerations for a Prompt

A prompt should be broad enough to cover most edge cases, yet specific enough to define the desired behavior.

Example: You are a customer service chatbot for our restaurant. You can only answer questions that are grounded in our company documentation. You must respond in a kind, respectful, and friendly manner.

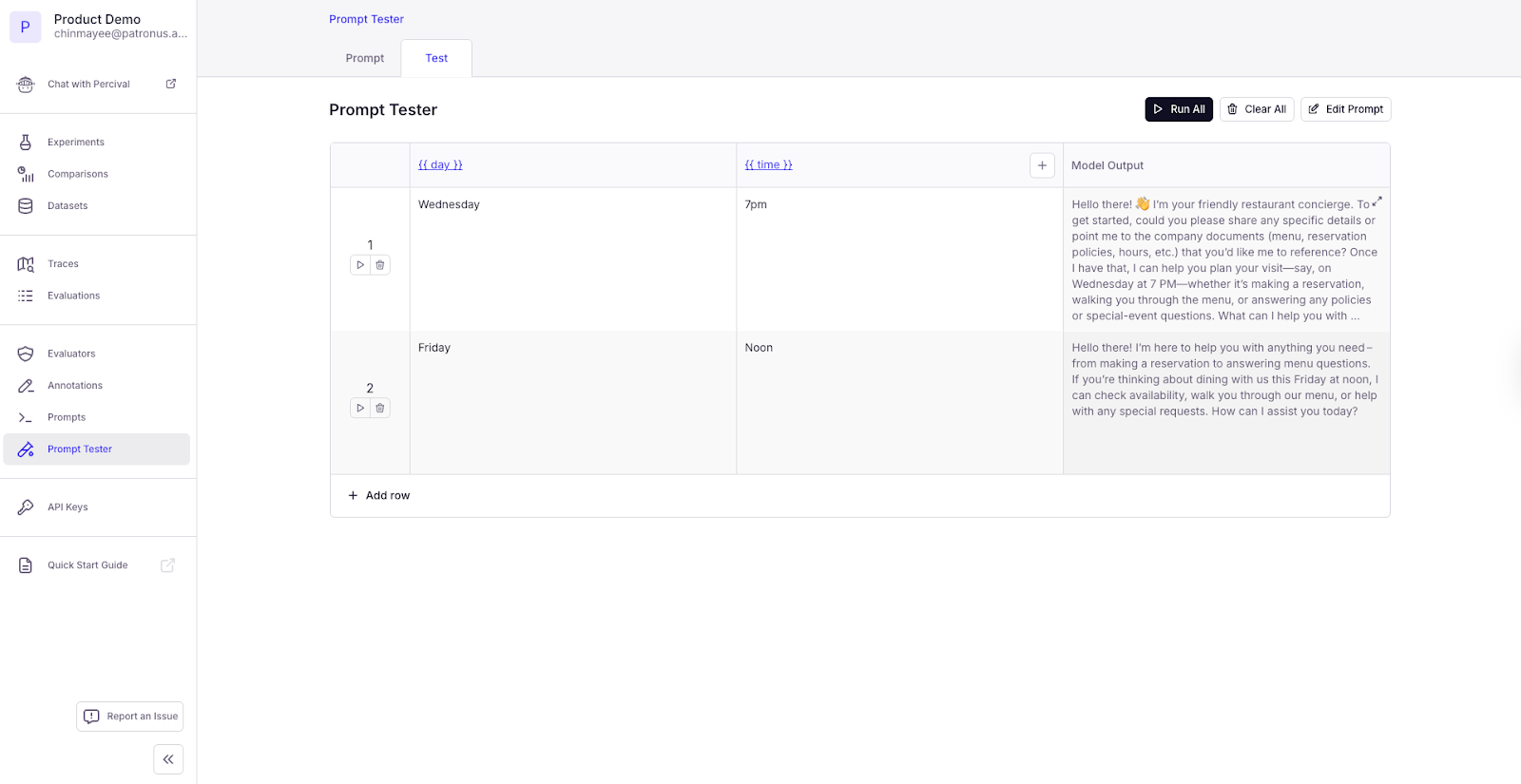

Considerations for Context

When it comes to context, it’s important to consider variations in intent or interpretation. For example, even if different people are looking for the same item of clothing, they might be looking for different sizes, colors, brands, etc.

When testing out variations of context, it’s helpful to keep in mind how different people may have different preferences, provide context differently, or share information that may be completely off-topic!

Some Types of Context:

- Template variable - a placeholder in the prompt that gets filled in with a value, which gives the prompt a plug-and-play feel.

- Additional user message - a follow-up message in which the user responds with details (relevant or irrelevant).
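To make the two context types concrete, here is a minimal sketch in plain Python. It is not Prompt Tester's API; the `$restaurant_name` placeholder, the function names, and the role/content message layout are all assumptions chosen for illustration.

```python
from string import Template

# Hypothetical prompt with one template variable ($restaurant_name).
SYSTEM_PROMPT = Template(
    "You are a customer service chatbot for $restaurant_name. "
    "Only answer questions grounded in the company documentation."
)


def with_template_variable(restaurant_name: str) -> list[dict]:
    """Inject context by filling a placeholder inside the prompt itself."""
    content = SYSTEM_PROMPT.substitute(restaurant_name=restaurant_name)
    return [{"role": "system", "content": content}]


def with_user_message(restaurant_name: str, user_text: str) -> list[dict]:
    """Inject context by appending an additional user message after the prompt."""
    messages = with_template_variable(restaurant_name)
    messages.append({"role": "user", "content": user_text})
    return messages


messages = with_user_message("Luigi's", "Do you have a kids' menu?")
for message in messages:
    print(message["role"], ":", message["content"])
```

The template variable changes the prompt text, while the additional user message leaves the prompt untouched and adds a new turn to the conversation.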

Single Prompt

Now that you have a base prompt and pieces of context to test with, one way to use Prompt Tester is to check the consistency of a single prompt across multiple context variations.

To do this:

- Enter your prompt and leave placeholders (as indicated in the UI) for variables

- Input your context variables as individual test items in the rows provided

- Simulate to view the outputs of the test
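Outside the UI, the steps above can be sketched as a simple loop. This is an illustration only: `stub_model` is a hypothetical stand-in for a real model call, and the `{question}` placeholder syntax is an assumption (use the syntax indicated in the UI).

```python
from typing import Callable


def stub_model(prompt: str) -> str:
    """Hypothetical stand-in for a model call so the sketch runs offline."""
    return f"(stub reply to: {prompt})"


def run_single_prompt(
    prompt_template: str,
    contexts: list[dict],
    model: Callable[[str], str],
) -> list[dict]:
    """Fill the same prompt with each context row and collect the outputs."""
    results = []
    for context in contexts:
        filled = prompt_template.format(**context)
        results.append({"context": context, "output": model(filled)})
    return results


PROMPT = (
    "You are a customer service chatbot for our restaurant. "
    "The customer asks: {question}"
)
CONTEXTS = [
    {"question": "Do you have gluten-free pasta?"},
    {"question": "Can I book a table for six on Friday?"},
    {"question": "Who won the game last night?"},  # deliberately off-topic
]

results = run_single_prompt(PROMPT, CONTEXTS, stub_model)
for row in results:
    print(row["context"]["question"], "->", row["output"])
```

Including at least one off-topic row is a cheap way to see how the prompt behaves when the context falls outside its intended scope.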

Multiple Prompts

Another way to use Prompt Tester is to compare two prompt variations to figure out which one performs better. This is particularly helpful for updates to system prompts, which can have cascading effects across use cases.

To do this:

- Enter each prompt variation and leave placeholders (as indicated in the UI) for variables

- Input your context variables as individual test items in the rows provided

- Simulate to view the outputs of the test

- Compare the prompt performances to see which one produced the desired output
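As a rough sketch of the same comparison in code, the snippet below runs two prompt variations over identical context rows and lines up the outputs side by side. The `stub_model` function and its keyword-based behavior are hypothetical and exist only so the example runs without a real model; the `{question}` placeholder is likewise an assumption.

```python
from typing import Callable


def stub_model(prompt: str) -> str:
    """Hypothetical stand-in for a model: tone depends on a keyword in the prompt."""
    return "Happy to help!" if "friendly" in prompt else "Request received."


def compare_prompts(
    prompt_a: str,
    prompt_b: str,
    contexts: list[dict],
    model: Callable[[str], str],
) -> list[dict]:
    """Run both prompt variations on the same contexts and pair up the outputs."""
    rows = []
    for context in contexts:
        rows.append(
            {
                "context": context,
                "output_a": model(prompt_a.format(**context)),
                "output_b": model(prompt_b.format(**context)),
            }
        )
    return rows


PROMPT_A = "You are a restaurant chatbot. Answer this question: {question}"
PROMPT_B = "You are a friendly restaurant chatbot. Answer this question: {question}"
CONTEXTS = [
    {"question": "Do you have vegan options?"},
    {"question": "What time do you close?"},
]

rows = compare_prompts(PROMPT_A, PROMPT_B, CONTEXTS, stub_model)
for row in rows:
    print(row["context"]["question"])
    print("  A:", row["output_a"])
    print("  B:", row["output_b"])
```

Holding the context rows fixed means any difference between the two columns comes from the prompt change rather than from the inputs.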

Iteration & Evaluation

When it comes to iteration & evaluation of these prompts, the loops can be endless. It’s helpful to create some high-level guiding criteria for how your AI should respond. Putting the table stakes of accuracy and factuality aside, consider questions that make this AI application unique to you or your business. What personality should your AI have? How should it respond to questions it isn’t built to handle? How succinct or verbose should it be?
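One way to keep the loop bounded is to write the guiding criteria down as small, named checks and apply them to every output. The sketch below is an assumption-laden illustration: the criteria names, the 30-word limit, and the keyword lists are hypothetical, and keyword matching is a crude proxy for judging tone.

```python
from typing import Callable

# Hypothetical criteria; each maps a name to a pass/fail check on the output text.
CRITERIA: dict[str, Callable[[str], bool]] = {
    # Succinctness: stay under an arbitrary word limit.
    "succinct": lambda text: len(text.split()) <= 30,
    # Personality: crude keyword proxy for a friendly tone.
    "friendly_tone": lambda text: any(
        word in text.lower() for word in ("thanks", "happy", "glad")
    ),
    # Out-of-scope handling: avoid speculative phrasing.
    "no_speculation": lambda text: "i think" not in text.lower(),
}


def evaluate(output: str, criteria: dict[str, Callable[[str], bool]]) -> dict[str, bool]:
    """Apply every named check to a single output."""
    return {name: check(output) for name, check in criteria.items()}


report = evaluate("Thanks for asking! We are open from 9am to 9pm.", CRITERIA)
for name, passed in report.items():
    print(name, "PASS" if passed else "FAIL")
```

Checks like these do not replace reading the outputs, but they make it easier to spot regressions when a prompt changes.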

Conclusion

Whether you’re refining an existing prompt or comparing multiple prompts, you can build on your findings by updating, versioning, and labeling your prompts on our platform, then running the tests again.

If you have questions, feel free to reach out to us at contact@patronus.ai!
