Powerful

AI Evaluation

The best way to ship top-tier AI products. Based on industry-leading AI research and tools

Explore the Patronus Product

Our Core Eval Platform

Evaluators, Experiments, Logs Comparisons, Datasets, Traces

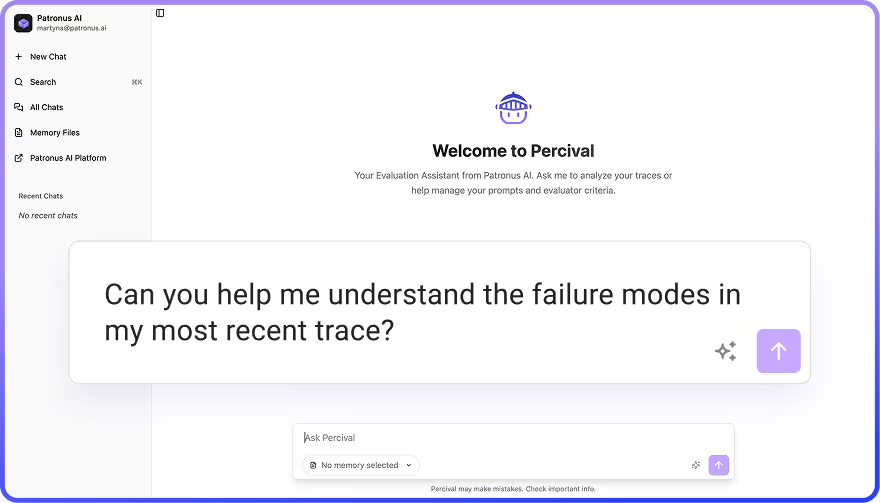

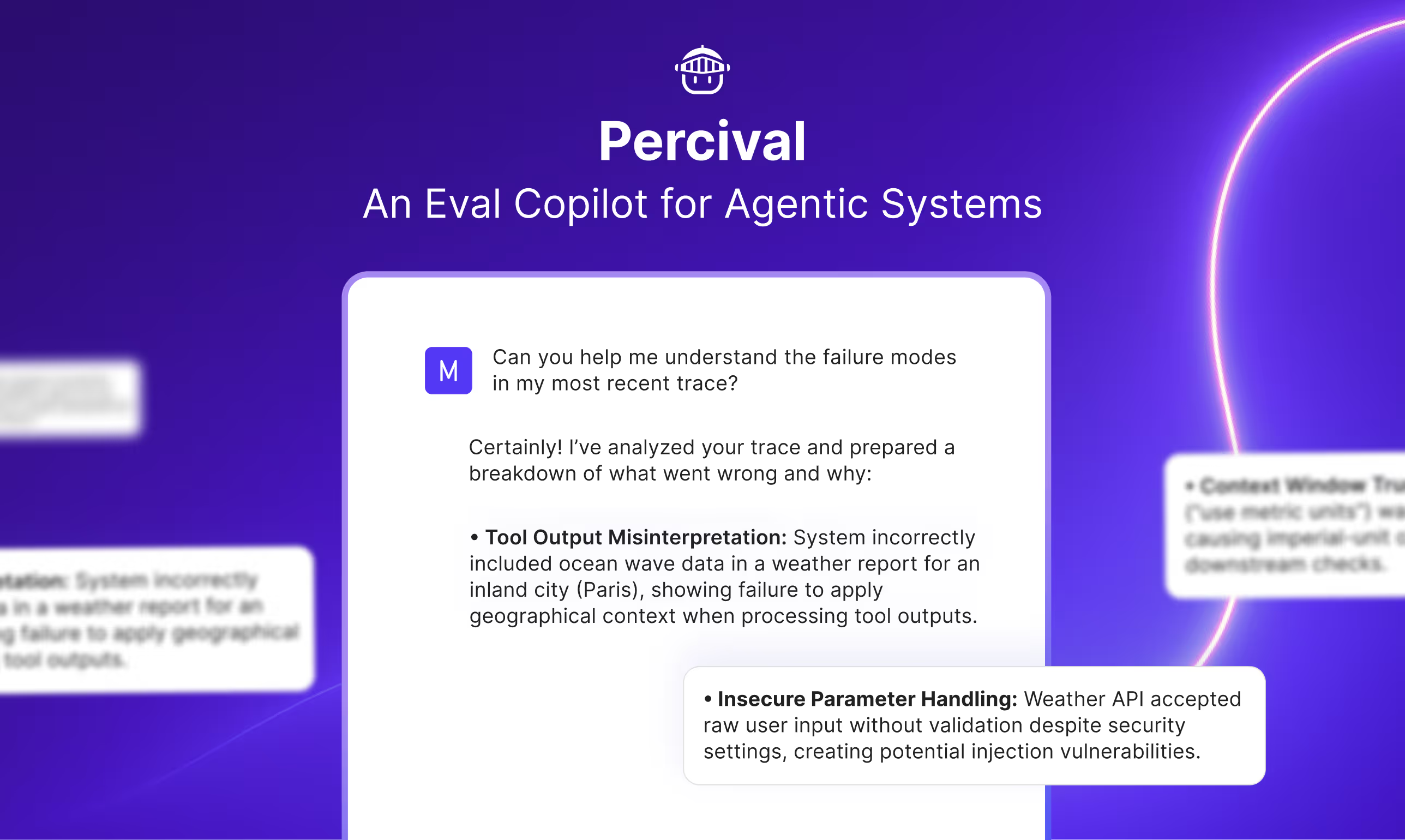

Percival

An Eval Copilot for Agentic Systems. Percival helps you analyze complex traces and identify 20+ agentic failure modes

RL Environments

Dynamic, feedback-driven environments for domain-specific agent training and evaluation

Discover our Areas of Experience

We have experience evaluating support responses to prevent hallucinations, maintain tone, develop guardrails, and understand assumptions

“Automated prompt fixes are awesome — it plugs straight into our revision cycle. Eventually, I’ll probably just feed the suggested prompt edits into a ‘revise my prompt’ prompt. It’s like infinite prompt recursion, and I kind of love it”

— Paul Modderman, Founding Engineer

Enhance and Govern Self-Generating Agents at Scale

Case Study

“Emergence’s recent breakthrough—agents creating agents—marks a pivotal moment not only in the evolution of adaptive, self-generating systems (...) which is precisely why we are collaborating with Patronus AI.”

— Satya Nitta, Co-founder and CEO

"It is an extremely helpful and interesting tool for building Agent systems. I think the best compliment that I can give as a developer is that this really made me feel empowered to test out a new idea."

— Developer at Weaviate

One key use case the Etsy AI team is leveraging generative AI for is autogenerating captions on product images to speed up listing. However, they kept running into quality issues — the captions often contained errors and unexpected outputs.

"Patronus helped us uncover error patterns and optimize AI outputs (...)

It became an invaluable part of our evaluation workflow."

— Jon Noronha, Co-Founder

Research-first benefits

Lynx

FinanceBench

BLUR

GLIDER

Our latest update

Percival Chat: An Eval Copilot for Agentic Systems

Chat, your eval co-pilot, aims to simplify the agent evaluation process even more by providing you with in-context guidance on tracing, integrations, evaluation criteria, and prompting.

%201.avif)